02.23.06

Posted in Maps, Technology trends at 11:06 pm by ducky

Because all the data associated with Google Maps goes through Google, they can keep track of that information. If they wanted to, they could store enough information to tell you which map markers fall within two miles of 1212 W. Springfield, Urbana, Illinois. Maybe one would be from Joe’s Favorite Bars mashup and maybe one would be from the Museums of the World mashup. Maybe fifty would show buildings on the University of Illinois campus from the official UIUC mashup, and maybe two would be from Josie’s History of Computing mashup.
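To make the "markers within two miles" idea concrete, here is a minimal sketch of a radius query. It assumes markers are stored with a latitude, a longitude, and the name of the mashup they came from; the `Marker` fields and function names are my own invention for illustration, not anything from the Google Maps API.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius


@dataclass
class Marker:
    mashup: str  # hypothetical: which mashup placed the marker
    label: str
    lat: float
    lng: float


def haversine_miles(lat1, lng1, lat2, lng2):
    """Great-circle distance in miles between two lat/lng points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lng2 - lng1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def markers_within(markers, lat, lng, radius_miles):
    """Return the markers no farther than radius_miles from (lat, lng)."""
    return [m for m in markers
            if haversine_miles(lat, lng, m.lat, m.lng) <= radius_miles]
```

A linear scan like this is only for illustration; anyone holding a large number of markers would presumably want a spatial index instead.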

Google could of course then use that mashup data in their location-sensitive queries, so if I asked for “history computing urbana il”, they would give me Josie’s links instead of returning the Urbana Free Library. (They would need to be careful to do this in a way that didn’t tromp on Josie, if they want to stick to their “Don’t be evil” motto.)

This is another argument for why they should recognize a vested interest in making it easy for developers to add their own area-based data. If Google allows people to easily put up information about specific polygons, then Google can search those polygons. Right now, because I had to do my maps as overlays, Google can’t pull any information out of them.

If Google makes polygons and their corresponding data easy to name, identify, and access, they will be able to do very powerful things in the future.

Addendum: I haven’t reverse-engineered the Google Maps javascript — I realized that it’s quite possible that the marker overlays are all done on the client side. (Desirable and likely, in fact.) In that case, they wouldn’t have the data. However, it would be trivial to insert some code to send information about the markers up to the server. Would that be evil? I’m not sure.


02.17.06

Posted in Hacking, Maps, Technology trends at 2:27 pm by ducky

I was in San Jose when the 1989 Loma Prieta earthquake hit, and I remember that nobody knew what was going on for several days. I have an idea for how to disseminate information better in a disaster, leveraging the power of the Internet and the masses.

I envision a set of maps associated with a disaster: ones for the status of phone, water, natural gas, electricity, sewer, current safety risks, etc. For example, where the phones are working just fine, the phone map shows green. Where the phone system is up, but the lines are overloaded, the phone map shows yellow. Where the phones are completely dead, the phone map shows red. Where the electricity is out, the power map shows red.

To make a report, someone with knowledge — let’s call her Betsy — would go to the disaster site, click on a location, and see a very simple pop-up form asking about phone, water, gas, electricity, etc. She would fill in what she knows about that location, and submit. That information would go to several sets of servers (geographically distributed so that they won’t all go out simultaneously), which would stuff the update in their databases. That information would be used to update the maps: a dot would appear at the location Betsy reported.

How does Betsy connect to the Internet, if there’s a disaster?

- She can move herself out of the disaster area. (Many disasters are highly localized.) Perhaps she was downtown, where the phones were out, and then rode her bicycle home, to where everything was fine. She could report on both downtown and her home. Or maybe Betsy is a pilot and overflew the affected area.

- She could be some place unaffected, but take a message from someone in the disaster area. Sometimes there is intermittent communication available, even in a disaster area. After the earthquake, our phone was up but had a busy signal due to so many people calling out. What you are supposed to do in that situation is to make one phone call to someone out of state, and have them contact everybody else. So I would phone Betsy, give her the information, and have her report the information.

- Internet service, because of its very nature, can be very robust. I’ve heard of occasions where people couldn’t use the phones, but could use the Internet.

One obvious concern is about spam or vandalism. I think Wikipedia has shown that with the right tools, community involvement can keep spam and vandalism at a minimum. There would need to be a way for people to question a report and have that reflected in the map. For example, the dot for the report might become more transparent the more people questioned it.
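Here is a rough sketch of how a report could turn into a colored dot that fades as people question it. The green/yellow/red colors come from the maps described above; the status names, the `Report` fields, and the particular fade rule (opacity halves every three challenges, with a floor so the dot never vanishes) are assumptions I made up for illustration.

```python
from dataclasses import dataclass

# Colors as described for the status maps; the status names are hypothetical.
STATUS_COLORS = {"ok": "green", "degraded": "yellow", "down": "red"}


@dataclass
class Report:
    service: str         # e.g. "phone", "water", "power"
    status: str          # a key of STATUS_COLORS
    lat: float
    lng: float
    challenges: int = 0  # how many people have questioned this report


def dot_opacity(challenges, half_life=3, floor=0.1):
    """Opacity halves every half_life challenges, never dropping below floor."""
    return max(floor, 0.5 ** (challenges / half_life))


def dot_style(report):
    """Color and opacity for drawing one report on the map."""
    return {
        "color": STATUS_COLORS[report.status],
        "opacity": dot_opacity(report.challenges),
    }
```

Keeping a floor on the opacity is a design choice: a heavily disputed report stays faintly visible, so people can still see that something was claimed there.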

The disaster site could have many more things on it, depending upon the type of disaster: aerial photographs, geology/hydrology maps, information about locations to get help, information about locations to volunteer help, topographic maps (useful in floods), etc.

What would be needed to pull this off?

- At least two servers, preferably at least three, that are geographically separated.

- A big honkin’ database that can be synchronized between the servers.

- Presentation servers, which work at displaying the information. There could be a Google Maps version, a Yahoo Maps version, a Microsoft version, etc.

- A way for the database servers and the presentation servers to talk to each other.

- Some sort of governance structure. Somebody is going to have to make decisions about what information is appropriate for that disaster. (Hydrology maps might not be useful in a fire.) Somebody is going to have to be in communication with the presentation servers to coordinate presenting the information. Somebody is going to have to make final decisions on vandalism. This governance structure could be somebody like the International Red Cross or something like the Wikimedia Foundation.

- Buy-in from various institutions to publicize the site in the event of a disaster. There’s no point in the site existing if nobody knows about it, but if Google, Yahoo, MSN, and AOL all put links to the site when a disaster hit, that would be excellent.

I almost did this project for an MS thesis project, but decided against it, so I’m posting the idea here in the hopes that someone could run with it. I don’t foresee having the time myself.


Posted in Maps, Random thoughts at 10:38 am by ducky

I’ve found some interesting things with my maps.

It is easy to find:

I was also surprised to see


02.16.06

Posted in Maps, Technology trends at 10:40 pm by ducky

I have been working on a Google maps mashup that has been a lot of work. While I might be able to get some benefit from investing more time and energy in this, I kept thinking to myself, “Google could do this so much better themselves if they wanted to. They’ve got the API, they’ve got the bandwidth, they’ve got the computational horsepower.”

Here’s what I’d love to see Google do:

- Make area-based mashups easier. Put polygon generation in the API. Let me feed you XML of the polygon vertices, the data values, and what color mapping I want, and draw it for me. (Note that with version 2 of the API, it might work to use SVG for this. I have to look into that.)

- Make the polygons first-class objects in a separate layer with identities that can feed back into other forms easily. Let me roll over a tract and get its census ID. Let me click on a polygon and pop up a marker with all the census information for that tract.

- Make it easy to combine data from multiple sources. Let me feed you XML of census tract IDs, data values, and color mapping, and tell you that I want to use census tract polygon information (or county polygons, or voting precinct polygons, or …) from some other site, and draw it for me.

- Host polygon information on Google. Let me indicate that I want census tract polygons and draw them for me.

- Provide information visualization tools. Let me indicate that I want to see population density in one map, percent white in another, median income in a third, and housing vacancy rates in a fourth, and synchronize them all together. (I actually had a view like that working, but it is computationally expensive enough that I worry about making it available.) Let me do color maps in two or three dimensions, e.g. hue and opacity.

- Start hosting massive databases. Start with the Census data, then continue on to the Bureau of Labor Statistics, CIA factbook information, USGS maps, state and federal budgets, and voting records. Sure, the information is out there already, but it’s in different formats in different places. Google is one of the few places that has the resources to bring them all together. They could make it easy for me to hook that data into information visualization tools.

- Get information from other countries. (This is actually tricky: sometimes governments copyright and charge money for their official data.)
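To give a feel for the "feed you XML of the polygon vertices, the data values, and what color mapping I want" idea, here is a small sketch. The XML layout, the element and attribute names, and the tract ID are all invented for illustration; no such format exists in the Google Maps API as far as I know.

```python
import xml.etree.ElementTree as ET

# Hypothetical feed: one polygon per tract, a data value, and its vertices.
SAMPLE = """
<polygons>
  <polygon id="tract-0001" value="0.42">
    <vertex lat="37.77" lng="-122.51"/>
    <vertex lat="37.77" lng="-122.45"/>
    <vertex lat="37.76" lng="-122.48"/>
  </polygon>
</polygons>
"""


def parse_polygons(xml_text):
    """Turn the hypothetical XML feed into a list of plain dictionaries."""
    root = ET.fromstring(xml_text.strip())
    polygons = []
    for poly in root.findall("polygon"):
        vertices = [(float(v.get("lat")), float(v.get("lng")))
                    for v in poly.findall("vertex")]
        polygons.append({
            "id": poly.get("id"),
            "value": float(poly.get("value")),
            "vertices": vertices,
        })
    return polygons


def value_to_color(value, low=(255, 255, 255), high=(255, 0, 0)):
    """Linearly blend two RGB colors; value is clamped to the range 0..1."""
    t = min(1.0, max(0.0, value))
    r, g, b = (round(lo + t * (hi - lo)) for lo, hi in zip(low, high))
    return f"#{r:02x}{g:02x}{b:02x}"
```

The point is that the mashup author would only have to supply IDs, values, and a color ramp; the drawing would be somebody else's problem.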

Wouldn’t it be cool to be able to show an animation of the price of bread divided by the median income over a map of Europe from ten years before World War II to ten years after?

So how would Google make any money from this? The most obvious way would be to put ads on the sites that display the data.

A friend of mine pointed out that Google could also charge for the data in the same way that they currently charge for videos on Google Video. Google could either charge the visualization producers, who would then need to extract money from their consumers somehow, or they could charge the consumers of the visualizations.

Who would pay for this information? Politicians. Marketers. Disaster management preparedness organizations. Municipal governments. Historians. Economists. The parents of seventh-graders who desperately need to finish their book report. Lots of people.


Posted in Hacking, Maps at 9:40 pm by ducky

I spent essentially all day today working on my Google Maps / U.S. Census mashups (instead of working on my Parallel Algorithms homework like I should have).

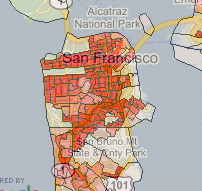

I think it’s pretty cool — I can display a lot of different population-based data overlaid on Google Maps. Here’s a piece of the population density overlay around San Francisco:

You can see Golden Gate Park, the Presidio, golf courses, and the commercial district as places where people don’t live.

To the best of my knowledge, this is one of the first area-based Google Maps mashups, and depending on how strict your definition is, it might be the first area-based mashup. (This is opposed to the many point-based mashups, where there are markers at specific points.)

(Addendum: After I wrote the above, someone pointed out an area-based satellite reception map. So now I have to say that mine is probably the first shaded area-based display.)

There might be a good reason why there aren’t many area-based mashups: it’s computationally quite expensive. I’m actually a bit nervous about the possibility of success: I might really trash my server if a lot of people start banging on it.

I have ideas of how to make it less computationally intensive, including caching data, but it’s not as straightforward as you might think. For example, to precompute and store all of the tiles in the US would take (by my back-of-the-envelope calculation) on the order of 1000 GB. In expert mode, a user can choose between 36 numerators and 5 denominators for 180 different maps. Furthermore, there are an infinite number of different color mappings that the user could want. That’s more storage space than I want to pay for.

I thought about maybe drawing the polygon information once, and basically filling in the colors later. However, the database lookups are themselves quite intensive. There are 65,677 census tracts in the US. The polygons representing those tracts have 3.5 million vertices between them, but I’m already approximating somewhat: if the pixel area of a tract is too small, I only draw the bounding box of the tract instead of every corner.
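The bounding-box shortcut looks roughly like the sketch below. It assumes the tract's vertices have already been projected to pixel coordinates for the current zoom level, and it uses the area of the bounding box as a stand-in for "the pixel area of a tract"; the function names and the 16-square-pixel threshold are made up for illustration and are not what my code actually uses.

```python
def bounding_box(points):
    """Return (min_x, min_y, max_x, max_y) for a list of (x, y) points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


def shape_to_draw(pixel_points, min_pixel_area=16.0):
    """Return the full polygon, or just its bounding box if the tract is tiny.

    pixel_points: tract vertices already projected to pixel coordinates.
    min_pixel_area: hypothetical cutoff in square pixels.
    """
    x0, y0, x1, y1 = bounding_box(pixel_points)
    if (x1 - x0) * (y1 - y0) < min_pixel_area:
        # Too small for the corners to matter on screen: draw a rectangle.
        return [(x0, y0), (x1, y0), (x1, y1), (x0, y1)]
    return list(pixel_points)
```

At low zoom levels most tracts fall under the threshold, so a four-corner rectangle replaces what might be dozens of vertices.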

I probably want to cache tiles in order of use. That’s pretty simple and straightforward, but I will have to then figure out a caching strategy for getting rid of tiles, or else buy lots and lots and lots of disk space.
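One candidate strategy is least-recently-used eviction: keep tiles as they are requested, and when the cache is full, throw away whichever tile has gone longest without being asked for. Here is a minimal in-memory sketch; the `TileCache` class, the shape of the key, and the `render` callback are all hypothetical, and a real version would presumably keep the tiles on disk.

```python
from collections import OrderedDict


class TileCache:
    """Keep at most max_tiles rendered tiles, evicting the least recently used."""

    def __init__(self, max_tiles, render):
        self.max_tiles = max_tiles
        self.render = render         # function: key -> tile (the expensive part)
        self._tiles = OrderedDict()  # oldest entry first, newest last

    def get(self, key):
        """Return the tile for key, rendering it only on a cache miss."""
        if key in self._tiles:
            self._tiles.move_to_end(key)     # mark as recently used
            return self._tiles[key]
        tile = self.render(key)
        self._tiles[key] = tile
        if len(self._tiles) > self.max_tiles:
            self._tiles.popitem(last=False)  # drop the least recently used tile
        return tile
```

A key might be something like `(overlay, zoom, x, y)`, so that popular areas at popular zoom levels stay cached while rarely viewed tiles get re-rendered on demand.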

Something else that would help a lot would be if people could switch data overlays without losing their lat/long/zoom place. I think I should be able to put links in the sidebar that get dynamically updated whenever the map center changes.

However, all of these things take some time, and I’m getting anxious about how I’ve been neglecting my schoolwork to play with the maps. The maps are more fun.


02.15.06

Posted in Gay rights at 12:50 am by ducky

I saw Brokeback Mountain today with my beloved husband. It was a very well-done movie, certainly heart-wrenching, but I didn’t like its message.

I have met a number of people who think that being gay is all about the sex, and this movie could certainly reinforce that stereotype. I never saw the protagonists being emotionally intimate with each other, just physically intimate. I never saw them talk, I never saw them make any sort of commitment to each other. They seemed about as emotionally intimate with each other as they were with their wives, maybe less so.

My beloved husband disagrees with me. He thinks that the lack of emotional intimacy or commitment was just a reflection of how badly messed up they were as a result of society’s horribly ill treatment of gay people.

Regardless, the movie made me feel very lucky to be married to my beloved husband: lucky that society approves of our relationship and lucky that he communicates more and better than the protagonists in the film.


Posted in Hacking at 9:10 pm by ducky

I’m transferring all of my old blog postings into WordPress.

This might take a while, and might be sort of random for a few days…


02.07.06

Posted in Random thoughts at 12:15 am by ducky

The title of the last post reminded me that I’ve been curious about something for a long time:

Is there alphabet soup in places that don’t use the Latin alphabet?

Fortunately, I live at Green College, home to many well-traveled people. I asked our internal mailing list, and presto! I had an answer: no.


02.06.06

Posted in Technology trends at 6:08 pm by ducky

There has been a lot of buzz in the past few years about Web services (XML, WSDL, REST, SOAP, WDFL) that I just don’t find very exciting. People seem to think that Life Will Be Better when everybody and their sister provides access to their data (aka web services) with {insert your favorite format and transport layer}, and you’ll get interesting combinations of data.

I admit, there is a really good example of combining two different services: Housing Maps is an absolutely brilliant combination of craigslist and Google Maps. However, I don’t think we are going to see a lot of Web-based mashups like that. Housingmaps.com is particularly interesting because there are so few mashups like it!

There is little economic incentive to provide a service that other people can mash up. It costs money to provide data, and there aren’t very many ways to recoup the cost of publishing data:

- Sell advertisements on the page where you display the data (e.g. Google)

- Charge for access to the information (e.g. a subscription charge)

- Reduce the costs of providing your core business (e.g. a SOAP order-taking app is cheaper than a human entering data)

- Get the taxpayer to pay for it (e.g. NOAA providing weather information)

Very few of these lead to more data becoming available for mashup.

- If party A provides the data and party B displays it on a web page, party A bears the cost and party B gets the advertising revenue. No incentive for A.

- If party A charges a subscription and party B displays it, then party B is effectively giving away access to party A’s information, which is not in party A’s interest (unless the data is so valuable to B that B is willing for A to charge by the access). (Party B gets the advertising revenue, goodwill, and/or publicity, not party A.)

- There is lots and lots of room for businesses to streamline their transactions with other companies, but little reason for businesses to make that information generally available. Walmart might insist that Pepsi set up a WDFL system for Walmart so they can order sodapop more easily, but I really doubt that Pepsi wants to take orders for sodapop from me. Walmart might insist that Coke set up a system where Walmart can get pricing on Coke products, but I bet Coke doesn’t want Pepsi to be able to access Coke’s prices.

- There is quite a lot of really good information that governments and non-profits/NGOs provide, I grant you that. The U.S. Census Bureau, for example, has a lot of really juicy data. However, I haven’t noticed governments making a point of expanding their services lately. Kind of the reverse. Furthermore, even government offices like publicity, so I think they’d still rather you looked at their Web pages instead of someone else’s.

Looking around, I don’t see a whole lot of commercial entities making data available for free. Google Maps and craigslist do, it’s true. (I’m not really sure why.) Google has a search API that they let people use, but only if they register and only for 1000 searches per day. (I suspect that those are “free samples” to get people hooked, and that they are hoping to later get people to pay money for additional searches.)

There is a very small amount of publicly available data in WSDL form. It is small enough that the services available just don’t lend themselves to interesting mashups. I just don’t see value in combining golf handicapping, Northern Ireland holiday calendar, and Canadian geocoding services.

Note that even the cool housingmaps.com mashup doesn’t use WSDL or SOAP or any of the acronyms-du-jour. As best as I can tell, housingmaps.com gets the craigslist information by scraping HTML; Google Maps has its own API.

It would be really cool if there were all kinds of web services that were freely available for anybody to mash up. But I’m not holding my breath.

