12.15.06

Review: Ko's An exploratory study of how developers seek, relate, and collect relevant information during software maintenance tasks

I found another paper that has some data on the variability of programmers’ productivity, and again it shows that the differences between programmers aren’t as big as the “common wisdom” made me think.

In An Exploratory Study of How Developers Seek, Relate, and Collect Relevant Information during Software Maintenance Tasks, Andrew Ko et al report on the times that ten experienced programmers spent on five (relatively simple) tasks. They give the average time spent as well as the standard deviation and, like the Curtis results I mentioned before, the spread is not as big as I expected. (Note, however, that I believe Ko et al include programmers who didn’t finish in the allotted time. This will make the average lower than it would be if they had waited for people to finish, and make the standard deviation appear smaller than it really is.)

| Task name | Average time | Standard deviation | std dev / avg |
|---|---|---|---|
| Thickness | 17 | 8 | 47% |
| Yellow | 10 | 8 | 80% |
| Undo | 6 | 5 | 83% |
| Line | 22 | 12 | 55% |
| Scroll | 64 | 55 | 76% |

These numbers are the same order of magnitude as the Curtis numbers (which were 53% and 87% for the two tasks).
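To see why counting people who ran out of time pulls both numbers down, here is a minimal sketch. It assumes that anyone who hits the limit is recorded as having taken exactly the limit; I don't know that the paper does it this way. The times are simulated, not the study's data, and `TIME_LIMIT`, `cap_times`, and `mean_and_stdev` are names I made up for the illustration.

```python
import random
import statistics


def cap_times(times, limit):
    """Record anyone who ran past the limit as having taken exactly the limit."""
    return [min(t, limit) for t in times]


def mean_and_stdev(times):
    """Return (mean, sample standard deviation) of a list of times."""
    return statistics.mean(times), statistics.stdev(times)


# Hypothetical data: ten simulated completion times in minutes, drawn from a
# right-skewed distribution. These are NOT the study's measurements.
rng = random.Random(0)
true_times = [rng.lognormvariate(3.0, 0.8) for _ in range(10)]

TIME_LIMIT = 30.0  # assumed cutoff in minutes, chosen only for illustration
observed_times = cap_times(true_times, TIME_LIMIT)

true_mean, true_sd = mean_and_stdev(true_times)
obs_mean, obs_sd = mean_and_stdev(observed_times)

print(f"uncapped: mean={true_mean:.1f}, sd={true_sd:.1f}")
print(f"capped:   mean={obs_mean:.1f}, sd={obs_sd:.1f}")
```

Capping can only lower the mean and the standard deviation or leave them unchanged, so the reported spread is at best a lower bound on the real one.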

Ko et al don’t break out the time spent per-person, but they do break out actions per person. (They counted actions by going through screen-video-captures, ugh, tedious!) While this won’t be an exact reflection of time, it presumably is related: I would be really surprised if 12 actions took longer than 208 actions.

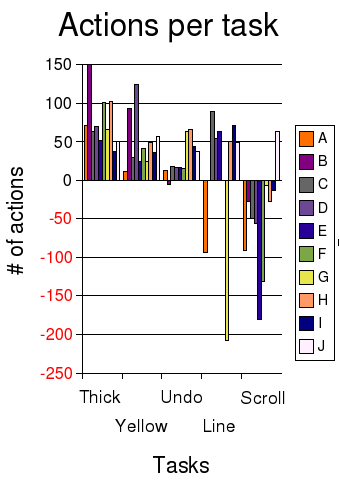

I’ve plotted the number of actions per task in a bar graph, with each color representing a different coder. The y-axis shows the number of actions spent on each task, with negative numbers if the coder didn’t successfully complete the task.

Looking at the action results closely, I was struck by the impossibility of ranking the programmers.

- Coder J (white) was the only one who finished the SCROLL task (and so the only one who finished all five), so you’d think that J was really hot stuff. However, if you look at J’s performance on other tasks, it was solid but not overwhelmingly fast.

- Coder A (orange) was really fast on YELLOW and UNDO, so maybe Coder A is hot stuff. However, Coder A spun his or her wheels badly on the LINE task and never finished.

- Coder G (yellow) got badly stuck on LINE as well, but did a very credible job on most of the other tasks, and it looks like he or she knew enough to not waste too much time on SCROLL.

- Coder B (light purple) spent a lot of actions on THICKNESS and YELLOW, and didn’t finish UNDO or SCROLL, but B got LINE, while A didn’t.

One thing that is very clear is that there is regression to the mean, i.e. the variability on all the tasks collectively is smaller than the variability on just one task. People seem to get stuck on some things and do well on others, and it’s kind of random which ones they do well or poorly on. If you look at the coders who finished all of THICKNESS, YELLOW, UNDO, and LINE, the spread is higher on the individual tasks than on the aggregate:

- The standard deviation of the number of actions taken divided by the average number of actions is 36%, 69%, 60%, and 25%, respectively.

- If you look at the number of actions each developer takes to do *all* tasks, however, then the standard deviation is only 21% of the average.

- The fewest actions taken (“fastest”) divided by the most actions (“slowest”) is 37%, 20%, 26%, and 54%, respectively.

- The overall “fastest” coder did 59% as many actions as the “slowest” overall coder.
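The comparison in the list above can be sketched in a few lines of Python. The action counts below are made up for illustration (four hypothetical coders, three hypothetical tasks), not the numbers from the paper, and I am assuming the sample standard deviation, since I don't know which variant the original figures use.

```python
import statistics

# Hypothetical action counts: one row per coder, one entry per task.
# These are NOT the counts reported by Ko et al.
ACTIONS = {
    "P": [20, 60, 40],
    "Q": [50, 20, 45],
    "R": [35, 30, 70],
    "S": [60, 45, 25],
}


def coefficient_of_variation(values):
    """Sample standard deviation divided by the mean."""
    return statistics.stdev(values) / statistics.mean(values)


def fastest_to_slowest(values):
    """Fewest actions divided by most actions."""
    return min(values) / max(values)


def per_task_columns(actions):
    """Regroup the per-coder rows into one list of counts per task."""
    return [list(column) for column in zip(*actions.values())]


def totals(actions):
    """Total actions per coder across all tasks."""
    return [sum(counts) for counts in actions.values()]


for number, column in enumerate(per_task_columns(ACTIONS), start=1):
    cv = coefficient_of_variation(column)
    ratio = fastest_to_slowest(column)
    print(f"task {number}: std dev / avg = {cv:.0%}, fastest / slowest = {ratio:.0%}")

overall = totals(ACTIONS)
print(f"all tasks: std dev / avg = {coefficient_of_variation(overall):.0%}, "
      f"fastest / slowest = {fastest_to_slowest(overall):.0%}")
```

In this made-up data, each coder is slow on a different task, so the per-task spreads are large while the totals land close together. That is the same pattern I saw in the real action counts.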

It also seemed like there was more of a difference between the median coder and the bottom than between the median and the top. This isn’t surprising: there are limits to how quickly you can solve a problem, but no limits to how slowly you can do something.
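One rough way to check that kind of lopsidedness is to compare the gap from the median down to the fastest coder with the gap from the median up to the slowest. This is just a sketch: the totals are invented, and `gaps_from_median` is a helper name I made up.

```python
import statistics


def gaps_from_median(values):
    """Return (median - smallest value, largest value - median)."""
    middle = statistics.median(values)
    return middle - min(values), max(values) - middle


# Hypothetical total action counts for ten coders (not the study's data).
total_actions = [18, 22, 25, 27, 30, 33, 38, 47, 60, 85]

to_fastest, to_slowest = gaps_from_median(total_actions)
print(f"median to fastest: {to_fastest}")
print(f"median to slowest: {to_slowest}")
```

If the second gap is much larger than the first, the distribution has a long slow tail, which is what a floor on how fast you can go and no ceiling on how slow would produce.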

What I take from this is that when making hiring decisions, it is not as important to get the top 1% of programmers as it is to avoid getting the bottom 50%. When managing people, it is really important to create a culture where people will give to and get help from each other to minimize the time that people are stuck.

Best Webfoot Forward » Programmer productivity — part 3 said,

February 15, 2007 at 7:06 pm

[…] My recent reflections on the Curtis results and reflections on the Ko et al results of experiments of programmer productivity have focused on one narrow slice, what I call “hands-on-keyboard”. Hands-on-keyboard productivity is measured by how fast someone who is given a small, well-defined task can do that task. As I mentioned in those two blog posts, it is hard to measure even that simple thing. […]

gpoo said,

May 15, 2007 at 7:00 pm

The table seems wrong.

The values you took belong to Table 3, page 975 of the paper. However, the average time for scroll was 17 minutes; 64.5 was the average number of actions for that activity.

Moreover, each developer was given 70 minutes to do 5 tasks. So an average of 64 minutes would leave only 6 minutes for the rest of the tasks, which contradicts the rest of the table.

The same happens with the standard deviation: +/- 13 minutes instead of +/- 55 minutes.

Regards,

Best Webfoot Forward » Review: Dickey on Sackman (via Bowden) said,

June 14, 2007 at 4:22 pm

[…] small sample size hurts, but (as in the Curtis data and the Ko data) I don’t see an order of magnitude difference in completion […]

Best Webfoot Forward » VanDev talk summary said,

February 6, 2008 at 9:29 pm

[…] I have reported previously on experiments by DeMarco and Lister, Dickey, Sackman, Curtis, and Ko which measure the time for a number of programmers to do a task. What I found is that the time […]

Paul said,

January 28, 2012 at 9:40 pm

“…it is really important to create a culture where people will give to and get help from each other to minimize the time that people are stuck.”

Pair Programming 🙂