05.28.07

Posted in programmer productivity at 4:38 pm by ducky

As I mentioned before, I saw two coders working on the same task using the same navigational style take very different lengths of time. While tracing forward through code, one happened to pick the correct choice from two equally plausible choices; the other happened to pick the wrong one and got off into the weeds.

This makes me even more convinced that the important thing when coding is to not get stuck. It also said to me that a more breadth-first search (BFS) strategy (where you explore each path for a little ways before examining any path in depth) was a better idea than depth-first search (DFS). This got me thinking about why my pal didn’t do BFS, why he spent 30 minutes off in the weeds.
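To make the BFS/DFS difference concrete, here is a rough sketch. The call graph is entirely made up (the node names and the depth of the dead end are assumptions for illustration): from `start` there are two equally plausible paths, one of which wanders off into the weeds.

```python
from collections import deque

# Hypothetical call graph: "wrong_1" starts a long dead end,
# "right_1" leads straight to the code we are looking for ("bug").
DEAD_END_DEPTH = 8
CALL_GRAPH = {"start": ["wrong_1", "right_1"], "right_1": ["bug"], "bug": []}
for i in range(1, DEAD_END_DEPTH + 1):
    CALL_GRAPH[f"wrong_{i}"] = [f"wrong_{i + 1}"] if i < DEAD_END_DEPTH else []


def visits_until_found(graph, start, target, breadth_first):
    """Return how many nodes get examined before the target is reached."""
    frontier = deque([start])
    seen = {start}
    examined = 0
    while frontier:
        # BFS takes the oldest entry (queue); DFS takes the newest (stack).
        node = frontier.popleft() if breadth_first else frontier.pop()
        examined += 1
        if node == target:
            return examined
        children = graph[node]
        # For DFS, push in reverse so the first-listed child is explored first.
        for child in (children if breadth_first else reversed(children)):
            if child not in seen:
                seen.add(child)
                frontier.append(child)
    return None


bfs_cost = visits_until_found(CALL_GRAPH, "start", "bug", breadth_first=True)
dfs_cost = visits_until_found(CALL_GRAPH, "start", "bug", breadth_first=False)
print(bfs_cost, dfs_cost)
```

In this toy graph the depth-first coder who guesses wrong has to walk the whole dead end before backing out, while the breadth-first coder only takes a step or two down it.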

Eclipse is really bad at supporting BFS. Some of the things it does are generically bad, but some are badnesses all of its own.

- The tabs jump around a lot. Eclipse currently has a strange way of dealing with its tabs. There is a way to get tabbing behavior where it doesn’t jump around a lot — more like Firefox, but it is not the default. (Window->Preferences->General->Editors-> put a check in “Close editors automatically:”, set “Number of opened editors before closing” to 1, and select the radio button “Open new editor”. Now there will be a little “pin” icon to the right of the forward-history button that you can use to say “Don’t close this pane”.)

- Eclipse doesn’t have a per-tab/page history like Firefox does. All your history gets jumbled together. This means that you can’t use tabs/pages to help you keep track of individual tasks.

- It’s difficult to mark points for future reference. There are bookmarks in Eclipse, but most people don’t know about them. Even if you are one of the few and proud who know about bookmarks, they are hard to use. It would be nice if in the history (which of course would be per-tab), you could see where you had bookmarks.

Many of the real stud-god hackers still use vi and emacs, and after doing all this thinking about BFS, I can see why. Emacs and vi plus xterms have really good facilities for keeping track of various streams/stacks of code traversals. If you decide you are at a decision point, where you don’t know which of two paths you should take, just open up a new xterm/editor, load up the file, and move that screen off to the side. Move it somewhere where you can still see it and remember that it is there, but where it isn’t in your way.

Inside each editor session, the editor provides good tools for quickly going forwards or backwards in the code, and that history never gets muddied with any other path that you are traversing.


05.26.07

Posted in Hacking, Maps at 5:49 pm by ducky

I just open-sourced the code for Mapeteria. If any of you are PHP4 gods, I have a few questions…


Posted in Technology trends at 10:34 am by ducky

Robert X. Cringely has an article where he says that Google will ultimately be killed off by employees who have left to start their own companies.

While I don’t own any crystal balls, I don’t think Google is as doomed as he does. His reasoning is that the 20% time Googlers have to work on other projects will result in 4,000 new product ideas per year, of which 400 will be good, but only about ten of which can be turned into new products. He says that the people who had the other 390 will be bitter and go start their own companies.

I have to argue with some of the math.

- Not all 20% projects are for new products. I bet most aren’t, in fact. If I were to start working at Google tomorrow, I would probably try to work on:

- user-generated thematic maps

- spam reduction

- better contact management in Gmail

- programmer productivity tools

- a to-do list manager

80% of the projects on my list are either internal tools or add-ons to some existing product. It is way, way easier to think of a feature enhancement than a completely new product. I would be really surprised (and disappointed) if they aren’t already working on a to-do list manager, so my list probably has 0% new products.

- Not everybody will be working alone. If, for example, I started a new to-do list manager, there is no way that I would be able to productize it all myself in 20% time. I would want to recruit others to help me on it. This means that there will be fewer ideas than people.

- One of Google’s great strengths is its hardware infrastructure. Their 2006 financial statement showed $2.4 billion (yes, Billion with a B) worth of property and equipment assets. That gives the potential defectors a reason to stay: they have a whole lot more toys to play with if they stay (and a real disadvantage if they try to compete directly with Google).

- I never worked on a 20% project, so I don’t know if they ever get canceled. I suspect that it’s very rare that you’d get told that you had to stop working on it. Thus if you really believed in something, you’d keep working on it as a 20% project because you were sure that if you just added a frobnitz, then the powers that be would see how incredibly cool it was, and would push it. Eventually, something else that was shiny would come along and you’d put aside your wonderful thing just for a bit… and your project would just wither away.

- Working at Google is awfully pleasant. In addition to the food and stuff, you get to hang out with really nice, really smart people, and other people take care of nuisances like facilities, payroll, tech support, etc. You get to work on fun stuff that you want to do. Why would you ever leave?

While Cringely figures there will be 390 worthwhile projects per year that will get killed, I figure that the number of worthwhile new-product ideas will be fewer than 20: (3700 coders in FY 2006 / 3 people per team) * (1 new product / 10 projects) * (1 product that is worthwhile / 10 proposed products) = about 12 worthwhile products.

In 2006, as near as I can tell, they launched nine new products: GCalendar, GDocuments, GSpreadsheets, GCheckout, SketchUp, GCo-op, and about three versions of existing products for mobile phones.

Only four of the things I mentioned were really new (vs. a port to phones) and came from in-house (vs. acquisitions). GCo-op doesn’t seem all that major to me, so really there were three major new in-house products: GCalendar, GSpreadsheets, and GCheckout. If my estimations are right, then that means that there were nine new products that got orphaned (my estimate of twelve worthwhile products, minus the three that launched). Probably less than 10% of the people who have orphaned products will leave, so that means less than one project would leave. If that product required Google’s infrastructure, then chances would be even lower that it would escape.
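For anyone who wants to poke at the numbers, here is the back-of-the-envelope estimate spelled out. Every figure comes from the paragraphs above; the variable names are just mine, and the rates are guesses, not data.

```python
# All inputs are the rough guesses from the post, not measured values.
coders = 3700              # Google coders in FY 2006
people_per_team = 3        # assumed size of a 20% project team
new_product_rate = 1 / 10  # guessed share of 20% projects that are new products
worthwhile_rate = 1 / 10   # guessed share of proposed products that are worthwhile

projects = coders / people_per_team
worthwhile_products = projects * new_product_rate * worthwhile_rate

launched_in_house = 3      # GCalendar, GSpreadsheets, GCheckout
orphaned = round(worthwhile_products) - launched_in_house

departure_rate = 0.10      # upper bound guessed in the post
projects_that_leave = orphaned * departure_rate

print(round(worthwhile_products, 1), orphaned, projects_that_leave)
```

Changing `people_per_team` or either rate shows how sensitive the conclusion is to those guesses.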

The fact that two of the products that were released in 2006 (SketchUp and GDocuments) came from acquisitions says to me that Google doesn’t have enough new product ideas internally to keep up with the number they can release and support. I don’t know, but I suspect that I was being optimistic in my estimate of new products per 20% project. It’s probably much lower than 10%. This would mean that Google actually has quite a ways to go before they start losing people who are frustrated that their pet project got cancelled.

I expect that there is a non-zero number of people who will quit and start their own companies, but I think that will be because they see an opportunity in an area outside of Google’s business. They will decide to open a restaurant, or consult, or design video games, or set up a charter bus tour company. Some people will step off of the treadmill and raise kids, go into the ministry, or become forest rangers or professional snowboarders. While Google might miss those people, I don’t think that the professional snowboarders will be a threat to Google’s continued existence.


05.25.07

Posted in Random thoughts at 6:48 pm by ducky

I have been a bit surprised at something that I can do that apparently most people can’t. I can listen to somebody speaking and repeat everything they say in realtime, with only the briefest of delays between when it comes out of their mouth and when it comes out of mine. From what I hear from others, most people can’t do this.

This is not a skill I’ve practiced. To the best of my knowledge, I have always been able to do this. It never occurred to me that anybody would not be able to do this. (Maybe I can simply because I assumed that I could?)

But if I’m going to have some secret power, couldn’t it be something useful? Like curing cancer? Diagnosing cancer? Or at least being able to cure canker sores?

Or, alternatively, is there any value at all in being able to simultaneously translate from English to English?


05.21.07

Posted in Hacking at 10:09 pm by ducky

When I started Mapeteria, it seemed like it would be pretty simple. It turned out to be much more complicated than I had originally envisioned. I wasn’t terribly surprised (I have, after all, spent a long time in industry), but it was a bit annoying.

- Installation hell. It didn’t have to be that bad, but I didn’t know about the xampp project.

- Geographic data. I needed boundary information for countries and states/provinces.

- It was easy to find US data, but it was detailed/complex enough that it was really slow. Fortuitously, I saw Kevin Khaw’s state information, and he let me use it.

- Because Mapeteria makes KML that people could use anywhere, I wanted to be quite certain that I had the right to use the boundary data. I found boundary data for Canada, but it wasn’t absolutely clear to me that I had the right to redistribute it. (Unlike in the US, the Canadian government retains the copyright to governmentally-produced information like maps.) I decided it would be faster to just trace out points on Google Maps and use that for the boundaries. (That also gave me control over the complexity of the polygons.)

- I found country data relatively quickly, but it was complex enough that it was extremely slow to render on Google Maps. I was able to simplify the polygons pretty easily (by modifying a script by John Coryat that he’d adapted from Stephen Lime). Unfortunately, there are a zillion little islands in that data, which make it much more complex than it needs to be. I believe that I will have to go remove all the islands by hand, yuck. 😛

- I stumbled upon boundary information for France, thanks to Alexandre Dubois (aka Zakapatul). Because I’d already done simplification for the countries, it was not hard to simplify France, but I still had to do it. I also had to strip a bunch of stuff out of the KML file that I didn’t need (like shields representing each département).

- Bugs in Other People’s Code.

- I never did get the debugger to work right in PHPEclipse, and I didn’t even have a good idea for how to troubleshoot it. So I just had no debugger. 😛 Echo statements (like printfs) were my friends.

- There is a bug in Google Maps such that polygons that straddle the 180 E/W line are just broken. This makes sense — they are inherently ambiguous. However, Siberia, Fiji, and a Russian island straddle the line, alas.

- There is a bug in Google’s maps that I found when I was tracing the Canadian outlines. It wasn’t a big deal, but I spent non-zero time on it.

- Politics. What if somebody submits a data file with information for the USSR? Or Yugoslavia in 1970? Or Ethiopia in 1950? Or East Germany? I only have boundary information for 2006, not for all possible boundaries for all time.

- Documentation.

- What do I call states/provinces/territories/départements? I spent a fair amount of time trying to figure that out. I could call them “first level administrative divisions”, but the only people who know what that means are map geeks. I could call them states or provinces or territories, but then I’d tick someone off. I never did figure out what to call them, so I call them states/provinces/territories/departments. 🙁

- What do I call the two-letter, uh two-number, uh two-character codes for states/provinces/territories/départements?

- How much detail do I give? How much is too much?

- Testing. In addition to unit tests, I was (for a period) trying to automate more global tests by comparing a generated KML to a “golden” KML. However, I kept changing what was “golden”: I would take out or simplify polygons, add some debugging information, change from space-separated points to newline-separated points (and back), such that it was a real pain to keep the tests consistent. Eventually I gave up and just had some “eyeball” tests: does it look right?

- Evangelism.

- Who do I tell? How soon? Do I tell them about countries, even though there is still the bug in Google Maps? Even though countries display very slowly? Lots of time spent wondering about that.

- Open Source. I decided to open-source the code after I was basically done.

- I needed to go through and make my code conform to PHP standards (like using_underscores instead of CamelCase), take out some of my hacks, clean up TODOs.

- I needed to figure out where I was going to host the code. My own server? Sourceforge? Google? None were perfect, alas, so in addition to investigating, I had to do some agonizing, too, before settling on Google hosting.

- I needed to transfer all my bugs from my private Bugzilla to the Google issue tracker.

- I still need to transfer the code, which means installing a Subversion client and figuring out how to use it. It probably won’t take long, it’s something I should do anyways (like eating my vitamins), but it’s One More Thing.
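The date-line problem in the list above is at least easy to detect ahead of time. Here is a minimal sketch of a heuristic for flagging polygons that straddle the 180 E/W line. The function name and the sample coordinates are made up, and it assumes no polygon legitimately spans more than half the globe.

```python
def crosses_antimeridian(ring):
    """ring is a list of (longitude, latitude) pairs in degrees.

    Heuristic: if two neighbouring points are more than 180 degrees of
    longitude apart, the edge between them almost certainly wraps across
    the 180 E/W line rather than going the long way around the planet.
    """
    count = len(ring)
    for i in range(count):
        lon_a = ring[i][0]
        lon_b = ring[(i + 1) % count][0]  # wrap around to close the ring
        if abs(lon_a - lon_b) > 180:
            return True
    return False


# Made-up rectangles, roughly where Fiji and British Columbia are.
fiji_like = [(177, -17), (-179, -17), (-179, -19), (177, -19)]
bc_like = [(-125, 49), (-115, 49), (-115, 55), (-125, 55)]

print(crosses_antimeridian(fiji_like), crosses_antimeridian(bc_like))
```

A polygon flagged this way could then be split into an eastern and a western piece before it goes into the KML, though I haven't tried that.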

So anyway, it always takes longer than you think it should; I decided to document why this time. 🙂


Posted in Hacking, programmer productivity, Technology trends at 8:26 pm by ducky

I have seen a lot of discussion over the years of the relative strengths (or weaknesses) of specific languages. People talk about why they use this language or that language, and they seem to always talk about specific linguistic constructs of their favored language.

“Mine has generics!” “Mine has macro expansion!” “Mine has closures!”

Sometimes the various devotees will give a nod to the richness of their language’s libraries, or to the robustness of their compiler, but rarely.

Recently, I’ve been working on a hobby project in PHP while reading up on tools like odb, JML, Daikon, ESC/Java2, javaspider, and EclEmma. The contrast is stark.

PHP is stable enough, and seems to have plenty of libraries, but PHPEclipse is quite downrev compared to Eclipse, the debugger doesn’t work at all for me (and I don’t know where to start troubleshooting), and there are essentially no additional tools. I feel like a starving dieter writing reviews for a gourmet food magazine: shackled to PHP and pining for the abundance of the Java tools.

Java’s advantages in the tool arena aren’t accidental.

- Its static typing and lack of pointers make a lot of tools easier to write.

- Having no pointers makes it easier to teach, so undergraduate classes are now usually taught in Java, which means that the grad students tend to use Java when they research new tools.

- The Eclipse IDE, being both open source and supported by IBM, is a great platform for tool development.

I am just about ready to swear fealty to Java, purely because of the richness of the third-party programming toolset.


Posted in Eclipse, programmer productivity at 8:09 pm by ducky

In a previous post, I said that I thought it would be handy to have your source editor color code based on which lines were executed in the prior execution of the code. I mused about merging Eclipse with a profiler in that post, but later realized that I could also use a code coverage tool… and then discovered someone had already done it. EclEmma is a fine code coverage tool that is nicely integrated with Eclipse and does exactly what I want.

EclEmma isn’t positioned as a debugging tool, but it sure can be used as one.
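To show the idea of "which lines ran last time" in miniature, here is a sketch that uses Python's standard `sys.settrace` hook to record the lines a function executed. This is not how EclEmma works (it instruments Java bytecode); `record_executed_lines` and `classify` are names I made up for the example.

```python
import sys


def record_executed_lines(func, *args):
    """Run func(*args) and return the line offsets (relative to the
    function's 'def' line) that actually executed."""
    executed = set()
    code = func.__code__

    def tracer(frame, event, arg):
        # Only follow frames that belong to the function under study.
        if frame.f_code is code:
            if event == "line":
                executed.add(frame.f_lineno - code.co_firstlineno)
            return tracer
        return None

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(previous)  # always restore whatever tracer was active
    return executed


def classify(n):
    if n < 0:                  # offset 1
        return "negative"      # offset 2
    return "non-negative"      # offset 3


lines_hit = record_executed_lines(classify, 5)
print(sorted(lines_hit))
```

An editor could color offset 2 differently from the others here, which tells you at a glance that the negative branch never ran. That is exactly the debugging hint I was after.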


Posted in programmer productivity at 9:37 am by ducky

From Iris Vessey’s Expertise in Debugging Computer Programs: An Analysis of the Content of Verbal Protocols Systems:

“Experts have more and/or larger knowledge structures in long-term memory, which they build up by the process of chunking. … Chunking refers to the concept whereby humans can effectively increase the capacity of short-term memory by grouping related items into a “chunk,” storing the chunk in long-term memory and the pointer to the chunk in short-term memory. For example, most of us would store KBD as three chunks, while we would store CAT as one since we perceive it as a unit, i.e., as an animal. (See Simon [39].) The importance of knowledge structures to expertise was first established by de Groot [46] and Chase and Simon [22] in their studies of expert and novice chess players. This work has since been replicated in the programming domain by Shneiderman [47] and McKeithen et al. [48].”

This sounds to me like an excellent reason to read Design Patterns.


05.20.07

Posted in Hacking, programmer productivity at 12:40 pm by ducky

From the name, I had thought that the Java Modeling Language (JML) was going to be some specialized variant of UML. I haven’t worked with UML, but what I see other people doing with it is drawing pictures.

Instead, JML turns out to be a specification for putting assertions about the code’s behaviour in comments. In that way, it is much more similar to Javadoc than to UML.

With both Javadoc and JML, the author of a method makes promises about what the method will do and what the method requires. In Javadoc, for example, @return int is a promise that the method will return an int, and @param foo int says that the method needs a parameter foo of type int.

With Javadoc, however, the promises are pretty minimal and the requirements are all stated elsewhere (like in the method definition). With JML, the promises about post-conditions and requirements on the pre-conditions can be much more elaborate. The programmer can promise that after the method finishes, for example:

- a particular instance variable will be less than and/or greater than some value

- an output sequence will be sorted in ascending order, or

- variable foo will be bigger than when the method started.

The programmer can also state very detailed input requirements, like that an instance variable can’t be null, that an input must be in a certain range, or that the sum of foo and bar must be less than baz.

This rigorous definition of pre- and post-conditions is useful for documentation. The next programmer doesn’t have to read through the entire method to figure out that foo can’t be null, for example.

Additionally, the JML spec is rigorous enough that it can be used with a variety of interesting and useful tools. With a special compiler (jmlc), pre- and post-condition checks can get compiled into the code. Thus if someone calls a method with a parameter outside the allowed bounds, the code can assert an error. (The assertions can also be turned off for production code if so desired.)
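To give a feel for what compiled-in checks buy you, here is a rough analogue in Python. This is not JML syntax and not what jmlc generates; the `contract` decorator and `sorted_copy` are made-up names, and the sketch only illustrates runtime-checked pre- and post-conditions that can be switched off.

```python
import functools


def contract(requires=None, ensures=None, enabled=True):
    """Wrap a function with optional pre- and post-condition checks.

    requires(*args) must be true before the call;
    ensures(result, *args) must be true after it.
    Passing enabled=False returns the function untouched, much like
    turning assertions off for production code.
    """
    def decorate(func):
        if not enabled:
            return func

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            if requires is not None and not requires(*args, **kwargs):
                raise AssertionError(f"precondition of {func.__name__} violated")
            result = func(*args, **kwargs)
            if ensures is not None and not ensures(result, *args, **kwargs):
                raise AssertionError(f"postcondition of {func.__name__} violated")
            return result

        return wrapper

    return decorate


@contract(
    requires=lambda items: items is not None,
    ensures=lambda result, items: all(a <= b for a, b in zip(result, result[1:])),
)
def sorted_copy(items):
    """Promise: the returned list is in ascending order."""
    return sorted(items)


print(sorted_copy([3, 1, 2]))
```

Calling `sorted_copy(None)` fails immediately with a message naming the violated precondition, instead of blowing up somewhere deep inside the method.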

But wait, there’s more! The specs are rigorous enough that a lot of checking can be done at compile time. If method A() promises that its output will be greater than 3, and method B() says that it requires its input to be greater than 5, then B(A()) would give a warning: A can give output (between 3 and 5) that B would gag on. See ESC/Java2.
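The reasoning behind that warning fits in a few lines. This toy sketch is in no way how ESC/Java2 works internally; it assumes integer values and handles only "greater than" bounds, and `find_counterexample` is a made-up name.

```python
def find_counterexample(promised_gt, required_gt):
    """A promises output > promised_gt; B requires input > required_gt.

    Return an integer that A may legally produce but B rejects,
    or None if every legal output of A is acceptable to B.
    """
    candidate = promised_gt + 1  # smallest integer A is allowed to return
    if candidate > required_gt:
        return None              # even A's smallest output satisfies B
    return candidate


print(find_counterexample(3, 5))  # A: > 3, B: > 5
print(find_counterexample(5, 3))  # A: > 5, B: > 3
```

The first call finds a value in the gap between the two bounds, which is the case the warning is about; the second finds nothing, because A's promise is stronger than B's requirement.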

But wait, there’s more! The JML annotations can be used to create unit tests. The jmlunit tool writes tests that wiggle the input parameters over the legal inputs to a method, and checks to see if the outputs are correct.

There’s the small problem that it’s a pain to write all the pre- and post-conditions. Fortunately, there’s a tool (Daikon) which will help you with that. Daikon watches as you run a program and sees what changes and what doesn’t change, and from that, generates promises/requirements. Note that those might not be correct. If there are bugs in your program or if that execution of your program didn’t hit all the corner cases, then those won’t be correct. However, it will give you a good start, and I find that it is easier to spot mistakes in somebody else’s stuff than it is to spot omissions in things that I did.
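Here is a very small sketch of the general idea of guessing invariants from observed runs. It is nowhere near what Daikon actually does; the function name, the invariant templates, and the sample observations are all made up for illustration.

```python
def infer_invariants(observations):
    """observations: list of dicts mapping a variable name to the value
    seen at one method exit. Returns candidate invariants that held in
    every observation. They are guesses, not proofs."""
    invariants = []
    names = sorted(observations[0])
    for name in names:
        values = [obs[name] for obs in observations]
        if all(v is not None for v in values):
            invariants.append(f"{name} != null")
            invariants.append(f"{name} >= {min(values)}")
            invariants.append(f"{name} <= {max(values)}")
    # Try a simple two-variable template in both directions.
    for a in names:
        for b in names:
            if a != b and all(
                obs[a] is not None and obs[b] is not None and obs[a] <= obs[b]
                for obs in observations
            ):
                invariants.append(f"{a} <= {b}")
    return invariants


# Made-up values recorded over three runs of some method.
runs = [{"foo": 1, "bar": 4}, {"foo": 2, "bar": 9}, {"foo": 3, "bar": 3}]
guessed = infer_invariants(runs)
for invariant in guessed:
    print(invariant)
```

Notice that it happily reports `bar <= 9`, which is probably just an accident of the three runs it saw. That is the "might not be correct" caveat in action, and also why a guess you can correct beats a blank page.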

This is all way cool.


05.19.07

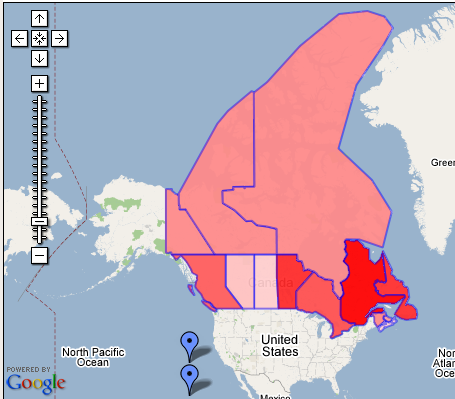

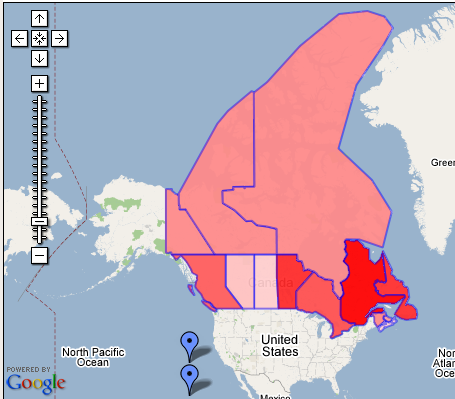

Posted in Hacking, Maps at 8:08 pm by ducky

A year ago, while I was in the midst of working on my Census Maps mashup, my Green College colleague Jana came up to me with a question. “I have a table of data about heat pump emissions savings for each province, and I want to make a map that colors each province based on the savings for that province. What program should I use to do that?”

I thought about all the work that I’d done for the Census Maps mashup — learning the Google Maps API, digging up the shape files for census tract boundaries, downloading and learning how to use the shapelib libraries to process the shapefiles, downloading and learning how to use gd, reacquainting myself with C++, reacquainting myself with gdb, debugging, trying to figure out why certain census tracts looked strange, etc, and rendered her an authoritative response: “Use Photoshop”, I said.

I was really dismayed that I had to tell her to use a paint program. Why should she — a geographer — have to learn about vertices and alpha channels and statically loaded libraries? Why wasn’t there some service where she could feed in a spreadsheet file and get back a map?

Well, I finally got tired of waiting for Google to do it, so I developed Mapeteria, my own service for users to generate their own thematic maps.

If you give Mapeteria a CSV file (which is one of the formats that any spreadsheet program will be delighted to save as) plus a little more information about how it should be displayed, it will give you back a map. You can either get a KML file (which you can look at in Google Earth) or a Google Maps mashup that shows the map directly in your web browser.
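To make the CSV-in, KML-out idea concrete, here is a minimal sketch. It is not Mapeteria's code (which is PHP); the function names, the white-to-red color ramp, and the square "provinces" are assumptions for illustration. The one KML detail worth knowing is that colors are written as aabbggrr hex, not the rrggbb you might expect.

```python
import csv
import io


def value_to_kml_color(value, low, high):
    """Map a value in [low, high] onto a white-to-red ramp.

    KML colors are opacity, blue, green, red (aabbggrr), in hex.
    """
    span = high - low
    fraction = 0.0 if span == 0 else (value - low) / span
    fraction = min(1.0, max(0.0, fraction))
    fade = round(255 * (1 - fraction))  # blue and green fade out as value rises
    return f"ff{fade:02x}{fade:02x}ff"


def csv_to_placemarks(csv_text, boundaries):
    """csv_text has rows of 'code,value'; boundaries maps each code to a
    list of (lon, lat) points. Returns one KML Placemark string per row."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    data = [(code.strip(), float(value)) for code, value in rows]
    values = [value for _, value in data]
    low, high = min(values), max(values)
    placemarks = []
    for code, value in data:
        ring = boundaries[code]
        closed = ring + [ring[0]]  # a KML ring must end where it starts
        coords = " ".join(f"{lon},{lat},0" for lon, lat in closed)
        color = value_to_kml_color(value, low, high)
        placemarks.append(
            f"<Placemark><name>{code}</name>"
            f"<Style><PolyStyle><color>{color}</color></PolyStyle></Style>"
            f"<Polygon><outerBoundaryIs><LinearRing>"
            f"<coordinates>{coords}</coordinates>"
            f"</LinearRing></outerBoundaryIs></Polygon></Placemark>"
        )
    return placemarks


# Made-up data: two square "provinces" and a savings figure for each.
sample_csv = "BC,10\nAB,30\n"
sample_boundaries = {
    "BC": [(-125, 49), (-120, 49), (-120, 54), (-125, 54)],
    "AB": [(-120, 49), (-110, 49), (-110, 54), (-120, 54)],
}
placemarks = csv_to_placemarks(sample_csv, sample_boundaries)
print(placemarks[0])
```

Wrapping the placemarks in a `<kml><Document>` element gives something Google Earth can open. The real work, as usual, is in getting decent boundary data.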

So Jana, here’s your map!

