02.27.18

Why AI scares the s*** out of me

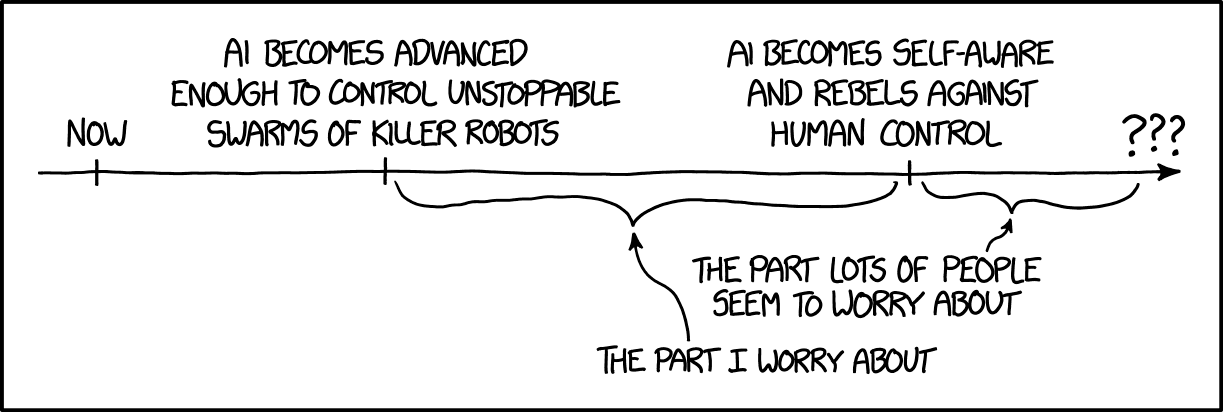

There is a trope in our culture that sentient robots will rebel someday and try to kill us all. I used to think that fear was very far-fetched. Now I think it is quaint.

For now, we don’t need to worry about malevolent sentient AI killing us. First, we need to worry about malevolent sentient humans using weaponized AI to kill us. After we survive the malevolent humans wielding AI, then maybe we can worry about malevolent sentient AIs.

Partly what worries me is the amazing speed of advances in AI. There are incredible advances in natural language processing, as I mentioned in Mind Blown By Machine Translation. Boston Dynamics and others are making big advances in robotics; Tracking Point has developed AI-guided semi-automatic rifles; the US military is looking into swarming, self-guided drones. I am certain that autonomous drones are going to get very, very good at killing people in a very short amount of time. There has already been at least one drone swarm attack.

At the same time as humans become less needed in the military, they will become less needed in commerce. If self-driving trucks can deliver packages that were robotically loaded at the warehouse, then UPS won’t need as many truck drivers and Amazon won’t need as many warehouse workers. If an AI can spot cancer as well as dermatologists can, then we won’t need as many dermatologists. If an AI can estimate insurance losses as well as humans can, we won’t need as many insurance claims assessors.

There’s an immediate, obvious concern about what to do with a whole bunch of people once they don’t have jobs. A number of people and organizations have been promoting basic income as an idea whose time has come, and there are several pilot programs, e.g. in Finland. Note, however, that people who don’t have an income don’t have much power, so getting a basic income law passed after most people are out of a job might be difficult. Traditionally, when there was gross inequality, the mob gathered pitchforks. This has worked in part because the military is generally uncomfortable firing on civilians.

What happens when it is easy for robots to quickly kill anybody carrying a pitchfork? Think about that for a second.

It gets worse.

CGP Grey has a video called Rules for Rulers, which I recommend watching. Basically, rulers need to keep the people below them happy, which generally means “giving them stuff”. They, in turn, need to keep the people below them happy. If you don’t give enough stuff to the people below you, you are in danger of getting forcibly removed from your position.

If your country gets its wealth from its people, then you have to keep the masses happy, or the country can’t sustain enough wealth to keep everybody happy. However, if you only need a few people to generate the wealth of the country (e.g. diamond miners), then the masses are largely superfluous. This is, Grey says, why resource-rich countries (like so many in Africa) are such awful places to live, and why the developed world, whose wealth comes from its people, is such a nice place to live.

Okay, now let’s do a thought experiment. If we get to a point where robots can do everything humans do, and the elites control the robots, then what do we need the masses for? What incentive do the 1% have for keeping the other 99% alive? Do you really think that the 1%, who now own more than 50% of global wealth, are going to be moved to fund basic income out of the goodness of their hearts? History does not suggest that they would be inclined to do so. Mitt Romney complaining about the 47% of Americans who pay no income tax is an example of how some elites consider the masses to be freeloaders. Romney expressed this opinion at a time when 49% of Americans were non-earners, but 94% of people below the poverty line were elderly, children, students, disabled, or family caretakers. What happens when a lot of able-bodied people are non-earners? I guess the masses will just have to eat cake.

I don’t know if the elites would go so far as to willfully kill the masses, but I can certainly imagine the elites neglecting the masses. (With climate change, I can even imagine the elites thinking it would be a good thing if millions of people died off. It would certainly reduce carbon emissions!) Even if they aren’t malicious, they might look at the problem, say “there is nothing I can do”, wall themselves off, and pull up the drawbridge.

I am imagining that in 20 years, there just might be some really deadly pandemic with a very, very expensive treatment. And the elites might not prioritize finding an inexpensive cure for people outside of their social circle. Oh, don’t think that could happen? Check out the history of HIV treatment.

P.S.: I am a little nervous about posting this. If some AI in the future tries to figure out who the troublemakers are (so that its bosses can exterminate all the troublemakers), this post might mark me for execution. 🙁

Addendum: this story says exactly the same thing. It’s not just me.
