05.09.07

Posted in Hacking, programmer productivity, robobait at 1:50 pm by ducky

I’m starting to look at a bunch of software engineering tools, particularly those that purport to help with debugging. One is javaspider, which is a graphical way of inspecting complex objects in Eclipse. It looks interesting, but it has almost no documentation on the Web. I’m not sure that it’s something I would use a lot, but in the spirit of making it easier to find things, here is a link to a brief description of how to use javaspider.

Keywords for the search engines: manual docs instructions javaspider inspect objects Eclipse


04.23.07

Posted in Hacking, programmer productivity, Technology trends at 1:31 pm by ducky

I recently watched some people debugging, and it seemed like a much harder task than it should have been. It seems like inside an IDE, you ought to be able to click START LOGGING, fiddle some with the program of interest, click STOP LOGGING, and have it capture information about how many times each method was hit.
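For a Python program, the START/STOP LOGGING idea can be approximated with the standard library's `sys.setprofile` hook. This is a minimal sketch, not an Eclipse feature: the `CallLogger` class is hypothetical, it only counts entries into Python-level functions, and it only watches the thread that called `start()`.

```python
import sys
from collections import Counter


class CallLogger:
    """Count how often each Python function is entered between start() and stop()."""

    def __init__(self):
        self.counts = Counter()  # (filename, function name) -> number of calls

    def _on_event(self, frame, event, arg):
        # sys.setprofile reports a "call" event each time a Python function is entered
        if event == "call":
            code = frame.f_code
            self.counts[(code.co_filename, code.co_name)] += 1

    def start(self):
        """The START LOGGING button: install the hook for the current thread."""
        sys.setprofile(self._on_event)

    def stop(self):
        """The STOP LOGGING button: remove the hook."""
        sys.setprofile(None)
```

Call `start()`, fiddle with the program, call `stop()`, and `logger.counts.most_common()` lists the hottest functions first. Anything that never ran simply doesn't appear. (The call to `stop()` itself gets counted once.)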

Then, to communicate that information to the programmer, change the presentation of the source code. If a routine was never executed, don’t show it in the source (or color it white). If none of a class’ methods or fields were executed, don’t show that class/file. If a method was called over and over again, make it black. If it was hit once, make it grey.

It doesn’t need to be color. You could make frequent classes/methods bigger and infrequent ones smaller. If the classes/methods that were never executed were simply not displayed (not visible in the Package Explorer in Eclipse, for example), that would be a big help.
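Turning hit counts into that kind of presentation could look something like this. It's a sketch under assumptions: the shade values are arbitrary, the input is a hypothetical dict mapping method names to hit counts, and the scale is linear.

```python
LIGHTEST_GREY = 192  # hypothetical shade for a method that was hit exactly once


def shade_for(hits, max_hits):
    """Gray level (0 = black, 255 = white) for a method's source text.

    Never executed -> white (effectively hidden); hit once -> light grey;
    the most-called method -> black; everything else scaled linearly between.
    """
    if hits <= 0:
        return 255
    if max_hits <= 1:
        return LIGHTEST_GREY
    fraction = (hits - 1) / (max_hits - 1)  # 0.0 for one hit, 1.0 for the hottest method
    return max(0, round(LIGHTEST_GREY * (1 - fraction)))


def visible_methods(hit_counts):
    """Drop methods that never ran, as if they were filtered out of the Package Explorer."""
    return {name: hits for name, hits in hit_counts.items() if hits > 0}
```

Call counts tend to be very lopsided (a few methods hit thousands of times), so a logarithmic scale might separate the middle of the range better than this linear one.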


04.08.07

Posted in Hacking at 5:02 pm by ducky

I spent all day yesterday working on configuration, and I’m probably going to do the same again today.

I am learning PHP with a hobby project, and my research group is heavily involved in Eclipse. (Mylar came out of my group, for example.) I thus wanted to try out the PHP plug-in for Eclipse. Simple, right?

Ugh.

That meant that I needed PHP4 and MySQL4. (I wanted PHP4 because that’s what my ISP has and what I’d started using. At one point, I upgraded webfoot.com to PHP5, and everything broke. I didn’t want to try to troubleshoot that while I was still learning PHP, so I just reverted to PHP4 on webfoot.com, and wanted to match that locally. I wanted MySQL4 because that’s what happened to be installed on webfoot.com for WordPress.)

Addendum: I have since learned of xampp, which has Apache/MySQL/PHP5 already configured together, and MAMP, which is similar (but only for MacOS). I can see why that would be useful. I’m tempted to blow away my entire installation and just download xampp. *Sigh*

PHP4

Downloading PHP4 was simple, since I use Ubuntu. I just used Synaptic, a nice GUI front-end to apt-get, to grab both php4 and php4-cli. The documentation seemed to say that there were only two lines that I really needed to add:

AddType application/x-httpd-php .php .php3 .php4 .phtml

AddType application/x-httpd-php-source .phps

plus I needed to verify that index.php was already in my DirectoryIndex. No problem. I edited the configuration under /etc/apache2 and off I went. Uh, no go. I searched and searched and searched… and finally noticed that I was editing apache2’s configuration, but that I also had apache1.3 installed, and apache1.3 was running, not apache2. Editing the config file for apache2 did me no good in getting PHP4 working with apache1.3.
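A quick sanity check for the two `AddType` lines and `DirectoryIndex` could be scripted. This sketch (the function name is hypothetical) only inspects the config text you hand it, so it would not have caught my real problem: it has to be pointed at the config of the server that is actually running.

```python
import re


def php_config_problems(conf_text):
    """Report what's missing for the AddType-based PHP setup in one Apache config file's text."""
    problems = []
    # [ \t]+ after "php" keeps this from matching the -source line, and from spanning lines
    if not re.search(r"^[ \t]*AddType[ \t]+application/x-httpd-php[ \t]+.*\.php\b", conf_text, re.M):
        problems.append("missing AddType application/x-httpd-php")
    if not re.search(r"^[ \t]*AddType[ \t]+application/x-httpd-php-source\b", conf_text, re.M):
        problems.append("missing AddType application/x-httpd-php-source")
    index_files = []
    for match in re.finditer(r"^[ \t]*DirectoryIndex[ \t]+(.+)$", conf_text, re.M):
        index_files.extend(match.group(1).split())
    if "index.php" not in index_files:
        problems.append("index.php not in DirectoryIndex")
    return problems
```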

I thought about it for a bit, and decided that it really was time to fully upgrade to apache2. I had stalled on it because twiki used to have some issues with apache2, but those seem resolved. So now I had to configure apache2.

Apache2

Fortunately, I’ve been configuring httpd and its children since 1994, so that went pretty quickly.

MySQL

I then turned my focus to MySQL. I used Synaptic to get the mysql-client-4.1 and mysql-server-4.1 packages. I was a good little girl and tried to follow along in the official docs. Unfortunately, I didn’t notice that the first doc I started reading was the MySQL5 manual, not the MySQL4 manual. (That didn’t take too long to figure out.) Next, I got very confused and worried because the manual talked about what the file layout looked like, and mine looked very different. I finally decided that I shouldn’t worry too much, because the Ubuntu way of doing things is sometimes different. However, when it started saying that I could check the installation by running the scripts in run-bench, then I got really perplexed.

Slocate

My slocate db didn’t have all the files that I just downloaded, so I decided to take a diversion and figure out how to update it. It took a little while to figure it out and do the update, but it was straightforward.

The magic incantation is:

sudo updatedb

Back to MySQL

Unfortunately, after updating the db, run-bench was still nowhere to be found. Somewhat troubled by this, I decided to continue.

I was pretty much dead in the water, however. When I ran mysql_install_db, it printed out some instructions about setting a password, which I, like a good little girl, dutifully followed. However, the official docs assumed that I had NOT set a password, so I was unable to follow them. They also didn’t tell me how to specify a password (they had no reason to, since they hadn’t told me to set one), so what I had been trying was wrong. (Hint: use the -p option and it will prompt you for a password.)

I finally blew away the data directory, then ran mysql_install_db again, and everything worked much better. I could now run mysql and manipulate the databases directly. Yay! However, PHP was still not able to connect to MySQL. Boo!

I did some sleuthing and found some very rude but helpful forum postings that led me to believe that I needed to put mysql.so in the PHP extensions directory. (To find the PHP extensions directory, run phpinfo() in a PHP script, then look for the extension_dir setting in the output.) Fortunately, slocate found two versions of mysql.so. Unfortunately, they were in a Perl subdirectory and a Python subdirectory, probably not what I wanted. On a whim, I tried making a symbolic link from one of those to the PHP extensions directory. I was just as successful as I expected I’d be: not at all.
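If you have the PHP command-line interpreter, `php -i` prints the same information as `phpinfo()` as plain text, with lines of the form `directive => local value => master value`. Here's a small sketch (the helper name is hypothetical) that pulls `extension_dir` out of that text.

```python
def extension_dir(phpinfo_text):
    """Return the extension_dir value from plain-text phpinfo() output, or None if absent."""
    for line in phpinfo_text.splitlines():
        name, sep, rest = line.partition("=>")
        if sep and name.strip() == "extension_dir":
            # text lines look like "extension_dir => local value => master value"
            return rest.split("=>")[0].strip()
    return None
```

To feed it, capture the CLI output, e.g. `subprocess.run(["php", "-i"], capture_output=True, text=True).stdout`.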

I took a break, searched the Web, took another break, had some chocolate, and then thought to look through the packages on Synaptic again. There was one called php4-mysql. Bingo! I downloaded it, and MySQL and PHP were now talking to each other.

PHPEclipse plugin

Off to download the PHP Eclipse plugin. Fortunately, all I had to do was go to Help->Software Updates->Find and Install->new->New Remote Site and enter the update URL, http://phpeclipse.sourceforge.net/update/releases. Unfortunately, it wanted Eclipse 3.1. I was hoping that it meant 3.1 or higher, but no, I found out that they really meant Eclipse 3.1, so I had to download 3.1 and try again.
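The difference between "3.1 or higher" and "really 3.1" is easy to show with version tuples. This is only an illustration: I don't know how PHPEclipse actually declared its requirement, and these hypothetical helpers handle only purely numeric versions (no Eclipse build qualifiers).

```python
def parse_version(text):
    """'3.1.2' -> (3, 1, 2); missing parts count as 0. Numeric parts only."""
    parts = [int(p) for p in text.split(".")]
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts[:3])


def at_least(installed, required):
    """'3.1 or higher': any newer release satisfies the requirement."""
    return parse_version(installed) >= parse_version(required)


def same_minor(installed, required):
    """'Really 3.1': only 3.1.x will do, so 3.2 is rejected."""
    return parse_version(installed)[:2] == parse_version(required)[:2]
```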

Eclipse 3.1

The 3.1 version of Eclipse is slightly hidden because it is so old, but I was able to find it pretty quickly and download it. I have been downloading and configuring Eclipse a lot lately because of my research lab’s involvement with it, so it was painless for me.

PHPEclipse again

There is a nice step-by-step guide to installing PHPEclipse that informed me that I would need DBG, the PHP Debugger.

DBG

I downloaded the tarball for the dbg modules, then copied the right version (4.4.2) over to the PHP extensions directory.

PHP again

I did a bunch of configuring in PHPEclipse, but got stuck on a configuration page that insisted on knowing my PHP interpreter. I didn’t seem to have one.

Fortunately, I do sometimes learn from my tribulations, and thought to look at Synaptic again. Lo and behold, there was a package php4-cli. I downloaded that, and PHPEclipse was running PHP scripts! Hallelujah!

PHPEclipse again

Unfortunately, I couldn’t figure out how to pass the QUERY_STRING to the scripts I was running under Eclipse. Try as I might, I couldn’t get the parameters into $_GET, $_POST, or $_REQUEST. Eventually, I settled on a hacky workaround: I wrote a function that first tried $_REQUEST and, if that didn’t work, pulled the variables out of $_ENV. To get them into $_ENV, I set them (one variable and value per line) in Run->Run…->(configuration)->Environment. Note that I had to have the radio button set to “Append environment to native environment”.
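The fallback lookup translates to Python roughly as follows. This is a hypothetical analogue, not my actual PHP function: `request` stands in for `$_REQUEST` and `environ` for `$_ENV`.

```python
import os


def request_var(name, request=None, environ=None):
    """Return a request parameter, falling back to the process environment.

    Under a real web server the value arrives in the request; when the script is
    launched from the IDE, it is set as an environment variable in the run configuration.
    """
    if request is not None and name in request:
        return request[name]
    if environ is None:
        environ = os.environ
    return environ.get(name)  # None if neither source has it
```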

So now PHP, MySQL, Apache, PHPEclipse, and DBG are all working together. They might not be in perfect harmony, but they are all at least singing the same song. Yay!

Addendum: not done, back to PHP4

Hah. I thought I was done, but it turns out that the PHP4 that I downloaded via Synaptic didn’t have curl compiled in, which I needed for one of my scripts. Downloading php4-curl made everything happy.

Why is this so hard?

There is a lot of documentation out on the Web on how to configure stuff, but a lot of it is for Windows and the Linux docs tend to assume you are downloading tarballs or RPMs. For example, the alert reader might still be wondering where run-bench got to. The docs finally told me (about three pages later, arg!) that you’d only see run-bench if you downloaded the source, that run-bench wasn’t in the binaries. Arg!

Part of the reason that this was hard is that PHP, MySQL, and Apache are each very complex packages that can be used in several different modes. All three of them can be run entirely independently. PHP can be run with or without curl as well, and probably sixteen other modes that I don’t know about.

Apache, MySQL, and Eclipse are complex enough that they have a fair amount of configuration that they need even if they are being run stand-alone.

More fundamentally, documentation isn’t sexy. 🙁 People don’t write a whole lot of documentation; a lot of my troubleshooting was aided not by formal docs but by blog postings and forum discussions. (That’s why I’m posting this — so the next poor schmoe doesn’t have to spend as long as I did.)

Finally, it’s hard enough to get one project documented well, and I was trying to make FIVE different open source software packages play together. It was actually surprising that I didn’t get caught by holes around the edges more often.


03.18.06

Posted in Hacking, Maps at 5:40 pm by ducky

One of the most venerable types of parallel processing is called SIMD, for Single Instruction Multiple Data. In those types of computers, you do the exact same thing to many different pieces of data (add two, multiply by five, etc.) at the same time. Some problems lend themselves to SIMD processing very well. Unfortunately, a huge number of problems do not: it’s rare that you want to process every piece of data exactly the same way.

Google has done a really neat thing with their architecture and software tools. They have abstracted things such that it looks to the developer like they have a Single Operation Multiple Data (SOMD) machine, where an operation can be something relatively complicated.

For example, to create one of my map tiles, I determine the coordinates of the tile, retrieve information about the geometry, retrieve information about the demographics, and draw the tile. With Google tools, once I have a list of tile coordinates, I could send one group of worker-computers (A group) off to retrieve the geometry information and a second (B group) off to retrieve the demographic information. Another group (the C group) could then draw the tiles. (Each worker in the C group would use data from exactly one A worker and one B worker.)
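On a single machine, the A/B/C structure can be mimicked with a thread pool. This is an analogy only, not Google's tools: `fetch_geometry`, `fetch_demographics`, and `draw_tile` are hypothetical stand-ins that return dummy data.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_geometry(tile):
    """A worker (stand-in): look up the polygons for one tile."""
    return {"tile": tile, "polygons": []}


def fetch_demographics(tile):
    """B worker (stand-in): look up the census numbers for one tile."""
    return {"tile": tile, "population": 0}


def draw_tile(geometry, demographics):
    """C worker (stand-in): combine exactly one A result and one B result for the same tile."""
    return f"tile {geometry['tile']}: {len(geometry['polygons'])} polygons"


def render_all(tiles, workers=4):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        geometries = pool.map(fetch_geometry, tiles)        # A group, submitted immediately
        demographics = pool.map(fetch_demographics, tiles)  # B group, runs alongside A
        # C group: the i-th A result is paired with the i-th B result
        return list(pool.map(draw_tile, geometries, demographics))
```

`Executor.map` submits all of its calls up front, so the A and B groups run side by side, and mapping over both result streams hands each C call one A result and one B result for the same tile.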

The A and B tasks are pretty simple, and maybe could be done by an old-style SIMD computer, but C’s job is much too complex to do in a SIMD computer. What steps are performed depends entirely on what is in the data. For a tile out at sea, the C worker doesn’t need to draw anything. For a tile in the heart of Los Angeles, it has to draw lots and lots of little polygons. But at this level of abstraction, I can think of “draw the tile” as one operation.

Under the covers, Google does a lot of work to make it look like everything is beautifully parallel. In reality, there probably aren’t as many workers as tiles, but the Google tools take care of dispatching jobs to workers until all the jobs are finished. To the developer, it all looks really clean and tidy.
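The "fewer workers than tiles" dispatching can be sketched with a shared queue and a fixed set of threads. This is a toy, single-machine version with a hypothetical `run_jobs` helper; a real cluster scheduler also has to cope with machines dying mid-job, which this ignores (a job whose `work` call raises just leaves `None` in its slot).

```python
import queue
import threading


def run_jobs(jobs, work, num_workers=3):
    """Run work(job) for every job using a fixed number of worker threads.

    Each worker keeps pulling the next job off a shared queue until none are left.
    """
    todo = queue.Queue()
    for index, job in enumerate(jobs):
        todo.put((index, job))
    results = [None] * len(jobs)

    def worker():
        while True:
            try:
                index, job = todo.get_nowait()
            except queue.Empty:
                return  # no jobs left: this worker is finished
            results[index] = work(job)

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```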

There are way more problems that lend themselves to SOMD than to SIMD, so I think this approach has enormous potential.


02.17.06

Posted in Hacking, Maps, Technology trends at 2:27 pm by ducky

I was in San Jose when the 1989 Loma Prieta earthquake hit, and I remember that nobody knew what was going on for several days. I have an idea for how to disseminate information better in a disaster, leveraging the power of the Internet and the masses.

I envision a set of maps associated with a disaster: ones for the status of phone, water, natural gas, electricity, sewer, current safety risks, etc. For example, where the phones are working just fine, the phone map shows green. Where the phone system is up, but the lines are overloaded, the phone map shows yellow. Where the phones are completely dead, the phone map shows red. Where the electricity is out, the power map shows red.

To make a report, someone with knowledge — let’s call her Betsy — would go to the disaster site, click on a location, and see a very simple pop-up form asking about phone, water, gas, electricity, etc. She would fill in what she knows about that location, and submit. That information would go to several sets of servers (geographically distributed so that they won’t all go out simultaneously), which would stuff the update in their databases. That information would be used to update the maps: a dot would appear at the location Betsy reported.
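Here is one way the report data and the "send it to several servers" step might be modeled. Everything here is hypothetical: the `Status` colors follow the green/yellow/red scheme above, and `Replica` is an in-memory stand-in for a real, geographically separate database server.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    OK = "green"         # working just fine
    DEGRADED = "yellow"  # up, but overloaded
    DOWN = "red"         # completely dead
    UNKNOWN = None       # the reporter didn't say; no dot on that map


@dataclass
class Report:
    lat: float
    lng: float
    phone: Status = Status.UNKNOWN
    water: Status = Status.UNKNOWN
    gas: Status = Status.UNKNOWN
    electricity: Status = Status.UNKNOWN


class Replica:
    """In-memory stand-in for one database server at a separate site."""

    def __init__(self, name, up=True):
        self.name = name
        self.up = up
        self.reports = []

    def store(self, report):
        if not self.up:
            raise ConnectionError(f"{self.name} is unreachable")
        self.reports.append(report)


def submit(report, replicas):
    """Send a report to every replica; return how many stored it (0 means it was lost)."""
    stored = 0
    for replica in replicas:
        try:
            replica.store(report)
            stored += 1
        except ConnectionError:
            pass  # that site is down; the other sites still get the report
    return stored
```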

How does Betsy connect to the Internet, if there’s a disaster?

- She can move herself out of the disaster area. (Many disasters are highly localized.) Perhaps she was downtown, where the phones were out, and then rode her bicycle home, to where everything was fine. She could report on both downtown and her home. Or maybe Betsy is a pilot and overflew the affected area.

- She could be some place unaffected, but take a message from someone in the disaster area. Sometimes there is intermittent communication available, even in a disaster area. After the earthquake, our phone was up but had a busy signal due to so many people calling out. What you are supposed to do in that situation is to make one phone call to someone out of state, and have them contact everybody else. So I would phone Betsy, give her the information, and have her report the information.

- Internet service, because of its very nature, can be very robust. I’ve heard of occasions where people couldn’t use the phones, but could use the Internet.

One obvious concern is about spam or vandalism. I think Wikipedia has shown that with the right tools, community involvement can keep spam and vandalism at a minimum. There would need to be a way for people to question a report and have that reflected in the map. For example, the dot for the report might become more transparent the more people questioned it.
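The fading-dot idea needs some rule for how transparent a questioned report gets. The formula below is an arbitrary choice for illustration (the function name is hypothetical), with a floor so that a heavily disputed dot stays faintly visible instead of vanishing.

```python
def dot_opacity(questions, full=1.0, floor=0.1):
    """Opacity for a report's dot: fully opaque when unquestioned, fading as more people question it."""
    return max(floor, full / (1 + questions))
```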

The disaster site could have many more things on it, depending upon the type of disaster: aerial photographs, geology/hydrology maps, information about locations to get help, information about locations to volunteer help, topographic maps (useful in floods), etc.

What would be needed to pull this off?

- At least two servers, preferably at least three, that are geographically separated.

- A big honkin’ database that can be synchronized between the servers.

- Presentation servers, which work at displaying the information. There could be a Google Maps version, a Yahoo Maps version, a Microsoft version, etc.

- A way for the database servers and the presentation servers to talk to each other.

- Some sort of governance structure. Somebody is going to have to make decisions about what information is appropriate for that disaster. (Hydrology maps might not be useful in a fire.) Somebody is going to have to be in communication with the presentation servers to coordinate presenting the information. Somebody is going to have to make final decisions on vandalism. This governance structure could be somebody like the International Red Cross or something like the Wikimedia Foundation.

- Buy-in from various institutions to publicize the site in the event of a disaster. There’s no point in the site existing if nobody knows about it, but if Google, Yahoo, MSN, and AOL all put links to the site when a disaster hit, that would be excellent.

I almost did this project for an MS thesis project, but decided against it, so I’m posting the idea here in the hopes that someone could run with it. I don’t foresee having the time myself.


02.16.06

Posted in Hacking, Maps at 9:40 pm by ducky

I spent essentially all day today working on my Google Maps / U.S. Census mashups (instead of working on my Parallel Algorithms homework like I should have).

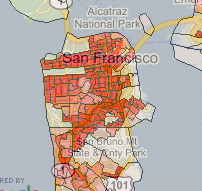

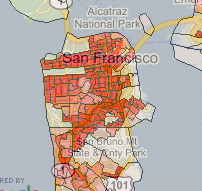

I think it’s pretty cool — I can display a lot of different population-based data overlaid on Google Maps. Here’s a piece of the population density overlay around San Francisco:

You can see Golden Gate Park, the Presidio, golf courses, and the commercial district as places where people don’t live.

To the best of my knowledge, this is one of the first area-based Google Maps mashups, and depending on how strict your definition is, it might be the first area-based mashup. (This is opposed to the many point-based mashups, where there are markers at specific points.)

(Addendum: After I wrote the above, someone pointed out an area-based satellite reception map. So now I have to say that mine is probably the first shaded area-based display.)

There might be a good reason why there aren’t many area-based mashups: they’re computationally quite expensive. I’m actually a bit nervous about the possibility of success: I might really trash my server if a lot of people start banging on it.

I have ideas for how to make it less computationally intensive, including caching data, but it’s not as straightforward as you might think. For example, precomputing and storing all of the tiles in the US would take (by my back-of-the-envelope calculation) on the order of 1000 GB. In expert mode, a user can choose among 36 numerators and 5 denominators, for 180 different maps. Furthermore, there are an infinite number of different color mappings that the user could want. That’s more storage space than I want to pay for.
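The storage estimate is a product of several factors, which is why it blows up so fast. This sketch doesn't reproduce the back-of-the-envelope figure (the tile count and tile size are left as parameters, since neither is given here), but it shows that every additional color mapping multiplies the whole total again.

```python
NUMERATORS = 36   # expert-mode numerator choices
DENOMINATORS = 5  # expert-mode denominator choices


def map_count():
    return NUMERATORS * DENOMINATORS  # 36 x 5 = 180 distinct maps


def precompute_bytes(tiles_per_map, bytes_per_tile, color_mappings=1):
    """Bytes needed to store every tile of every map under each color mapping."""
    return map_count() * color_mappings * tiles_per_map * bytes_per_tile
```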

I thought about maybe drawing the polygon information once, and basically filling in the colors later. However, the database lookups are themselves quite intensive. There are 65,677 census tracts in the US, and the polygons representing them have 3.5 million vertices between them. That’s even with some approximation already: if a tract’s area in pixels is too small, I draw only its bounding box instead of every corner.
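The bounding-box shortcut might look like this, assuming the tract outline has already been projected into pixel coordinates. The area threshold is a made-up number, the helper names are hypothetical, and the area comes from the shoelace formula.

```python
def shoelace_area(points):
    """Area of a simple polygon given as a list of (x, y) pixel coordinates."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def bounding_box(points):
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return [(min(xs), min(ys)), (max(xs), min(ys)), (max(xs), max(ys)), (min(xs), max(ys))]


def outline_to_draw(points, min_area=4.0):
    """Full outline for tracts that are big on screen; four corners for tiny ones."""
    if shoelace_area(points) < min_area:
        return bounding_box(points)
    return points
```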

I probably want to cache tiles in order of use. That’s pretty simple and straightforward, but I will have to then figure out a caching strategy for getting rid of tiles, or else buy lots and lots and lots of disk space.
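One common eviction strategy is least-recently-used (LRU): when the cache is full, throw away the tile nobody has asked for in the longest time. This is just one candidate, sketched with a hypothetical `TileCache` class; `render` is a hypothetical callback that draws a tile on a cache miss.

```python
from collections import OrderedDict


class TileCache:
    """Least-recently-used tile cache holding at most `capacity` tiles."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._tiles = OrderedDict()  # key -> tile, oldest use first

    def get(self, key, render):
        if key in self._tiles:
            self._tiles.move_to_end(key)  # mark as most recently used
            return self._tiles[key]
        tile = render(key)  # cache miss: draw the tile
        self._tiles[key] = tile
        if len(self._tiles) > self.capacity:
            self._tiles.popitem(last=False)  # evict the least recently used tile
        return tile
```

In practice the key would be something like (overlay, zoom, x, y), and the capacity would come from however much disk I'm willing to buy.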

Something else that would help a lot would be if people could switch data overlays without losing their lat/long/zoom place. I think I should be able to put links in the sidebar that get dynamically updated whenever the map center changes.
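Keeping the lat/long/zoom when switching overlays mostly comes down to building each sidebar link from the current map state. In the page itself this would run in JavaScript on every map move; the Python below just shows the URL construction, and the parameter names and `base_url` are hypothetical.

```python
from urllib.parse import urlencode


def overlay_link(base_url, overlay, lat, lng, zoom):
    """Build a link that switches overlays but keeps the current map position."""
    query = urlencode({"overlay": overlay, "lat": f"{lat:.5f}", "lng": f"{lng:.5f}", "zoom": zoom})
    return f"{base_url}?{query}"
```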

However, all of these things take some time, and I’m getting anxious about how I’ve been neglecting my schoolwork to play with the maps. The maps are more fun.


02.10.06

Posted in Hacking at 9:10 pm by ducky

I’m transferring all of my old blog postings into WordPress.

This might take a while, and might be sort of random for a few days…


01.30.06

Posted in Hacking at 10:43 pm by ducky

I wanted to keep control over my blog, so I wanted to use my own software.

Of course, I never actually got around to finishing setting things up the way I wanted. So I never posted.

I finally decided that keeping tight ownership of non-existent posts wasn’t really buying me much. Besides, it was either set up a new blog or catch up on my studies, so here’s the new one.

Go see the old blog.

