11.10.20

Posted in Hacking, programmer productivity at 11:55 am by ducky

As mentioned here and here, there is evidence that generating lots of hypotheses helps find the right answer faster.

Hypotheses are a Liability shows that having a hypothesis can make you less able to discover interesting facts about a data set. Very interesting!

Permalink

06.21.20

Posted in Family, Hacking, Married life, programmer productivity, Technology trends at 11:07 am by ducky

After waiting literally decades for the right to-do list manager, I finally broke down and am writing one myself, provisionally called Finilo*. I have no idea how to monetize it, but I don’t care. I am semi-retired and I want it.

I now have a prototype which has the barest, barest feature set and already it has changed my life. In particular, to my surprise, my house has never been cleaner!

Before, there were three options:

- Do a major clean every N days. This is boring, tedious, tiring, and doesn’t take into account that some things need to be cleaned often and some very infrequently. I don’t need to clean the windows very often, but I need to vacuum the kitchen every few days.

- Clean something when I notice that it is dirty. This means that stuff doesn’t get clean until it’s already on the edge of gross.

- Hire someone to clean every N days. This means that someone else gets the boring, tedious, tiring work, but it’s a chore to find and hire someone, you have to arrange for them to be in your space and be somewhat disruptive, and of course it costs money.

Now, with Finilo, it is easy to set up repeating tasks at different tempos. I have Finilo tell me every 12 days to clean the guest-bathroom toilet, every 6 days to vacuum the foyer, every 300 to clean the master bedroom windows, etc.

Because Finilo encourages me to make many small tasks, each of the tasks feels easy to do. I don’t avoid the tasks because they are gross or because the task is daunting. Not only that, but because I now do tasks regularly, I don’t need to do a hyper-meticulous job on any given task. I can do a relatively low-effort job and that’s good enough. If I missed a spot today, enh, I’ll get it next time.

This means that now, vacuuming the foyer or cleaning the toilet is a break (an opportunity to get up from my desk and move around a little) instead of something to avoid. This is much better for my productivity than checking Twitter and ratholing for hours. (I realize that if you are not working from home, you can’t go vacuum the foyer after finishing something, but right now, many people are working from home.)

It helps that I told Finilo how long it takes to do each chore. I can decide that I want to take an N-minute break, and look at Finilo to see what task I can do in under that time. It does mean that I ran around with a stopwatch for a few weeks as I did chores, but it was totally worth it. (Cleaning the toilet only takes six minutes. Who knew?)
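To make the idea concrete, here is a minimal Python sketch of repeating chores at different tempos plus a "what fits in my break?" query. This is not Finilo's actual code: the `Chore` class, its field names, and `fits_in_break` are hypothetical, and the durations other than the six-minute toilet are made-up numbers.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class Chore:
    name: str
    every_days: int   # tempo: repeat interval in days
    minutes: int      # measured time to do the chore
    last_done: date

    def is_due(self, today: date) -> bool:
        return today >= self.last_done + timedelta(days=self.every_days)

def fits_in_break(chores, today, break_minutes):
    """Return the due chores that fit in the break, shortest first."""
    due = [c for c in chores if c.is_due(today) and c.minutes <= break_minutes]
    return sorted(due, key=lambda c: c.minutes)

today = date(2020, 6, 21)
chores = [
    Chore("clean guest-bathroom toilet", 12, 6, today - timedelta(days=12)),
    Chore("vacuum foyer", 6, 10, today - timedelta(days=7)),            # 10 min is a guess
    Chore("clean master bedroom windows", 300, 45, today - timedelta(days=20)),
]
print([c.name for c in fits_in_break(chores, today, 8)])
```

With an eight-minute break, only the toilet qualifies: the foyer is due but takes too long, and the windows are not due yet.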

And this, like I said, is with a really, really early version of Finilo. It’s got a crappy, ugly user interface, it breaks often, I can’t share tasks with my spouse, it’s not smart enough yet to tell me when I am taking on more than I can expect to do in a day, there’s not a mobile version, etc. etc. etc… and I still love it!

*Finilo is an Esperanto word meaning “tool for finishing”.

Permalink

03.12.12

Posted in Eclipse, programmer productivity at 9:57 pm by ducky

Several years ago, I found that differential code coverage was extremely powerful as a debugging tool and made a prototype using EclEmma. I also had some communications with the EclEmma team, and put in a feature request for differential code coverage. Actually, I thought that incremental code coverage would be easier for people to understand and use.

Well, EclEmma 2.1 just came out, and it has incremental code coverage in it! I am really excited by this, and wrote a blog post at my workplace. I have given motivation on this blog before, but I give some more on the Tasktop blog posting, as well as some instructions for which buttons to push to effectively use EclEmma to do incremental code coverage.

Permalink

05.20.09

Posted in Hacking, programmer productivity, robobait, Technology trends at 11:44 am by ducky

I had a very brief but very interesting talk with Prof. Margaret Burnett. She does research on gender and programming at Oregon State University, but was in town for the International Conference on Software Engineering. She said that many studies have shown that women are, in general, more risk averse than men are. (I’ve also commented on this.) She said that her research found that risk-averse people (most women and some men) are less likely to tinker, to explore, to try out novel features in both tools and languages when programming.

I extrapolate that this means that risk-seeking people (most men and some women) were more likely to have better command of tools, and this ties into something that I’ve been voicing frustration with for some time: there is no instruction on how to use tools in the CS curriculum. I had never seen it as a gender-bias issue before, though. I can see how a male universe would think there was no need to explain how to use tools because they figured that the guys would just figure it out on their own. And most guys might, but most of the women and some of the men might not figure out how to use tools on their own.

In particular, there is no instruction on how to use the debugger: not on what features are available, not on when you should use a debugger vs. not, and none on good debugging strategy. (I’ve commented on that here.) Some of using the debugger is art, true, but there are teachable strategies, practically algorithms, for how to use the debugger to achieve specific ends. (For example, I wrote up how to use the debugger to localize the causes of hangs.)

Full of excitement from Prof. Burnett’s revelations, I went to dinner with a bunch of people connected to the research lab I did my MS research in. All men, of course. I related how Prof. Burnett said that women didn’t tinker, and how this obviously implied to me that CS departments should give some instruction on how to use tools. The guys had a different response: “The departments should teach the women how to tinker.”

That was an unsatisfying response to me, but it took me a while to figure out why. It suggests that the risk-averse pool doesn’t know how to tinker, while in my risk-averse model, it is not appropriate to tinker: one shouldn’t goof off fiddling with stuff that has a risk of not being useful when there is work to do!

(As a concrete example, it has been emotionally very difficult for me to write this blog post today. I think it is important and worthwhile, but I have a little risk-averse agent in my head screaming, screaming at me that I shouldn’t be wasting my time on this: I should be applying for jobs, looking for an immigration lawyer, doing laundry, or working on improving the performance of my maps code. In other words, writing this post is risky behaviour: it takes time for no immediate payoff, and only a low chance of a future payoff. It might also be controversial enough that it upsets people. Doing laundry, however, is a low-risk behaviour: I am guaranteed that it will make my life fractionally better.)

To change the risk-averse population’s behaviour, you would have to change their entire model of risk-reward. I’m not sure that’s possible, but I also think that you shouldn’t want to change the attitude. You want some people to be risk-seeking, as they are the ones who will get you the big wins. However, they will also get you the big losses. The risk-averse people are the ones who provide stability.

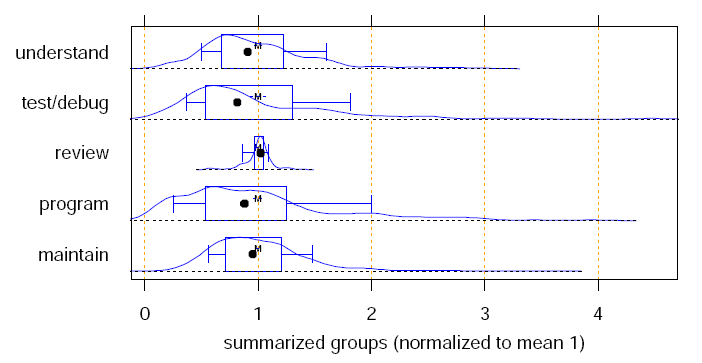

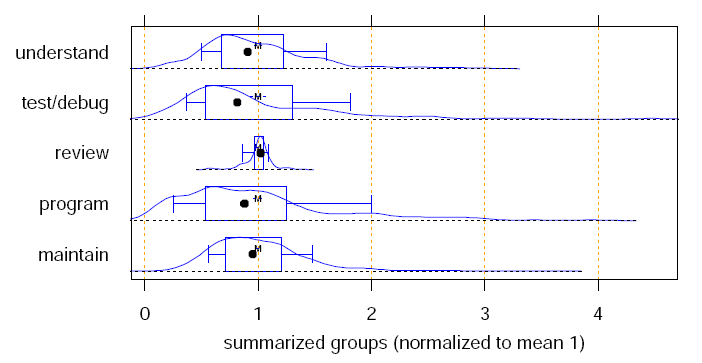

Also note that because there is such asymmetry in task completion time between above-median and below-median, you might expect that a bunch of median programmers are, in the aggregate, more productive than a group at both extremes. (There are limits to how much faster you can get at completing a task, but there are no limits to how much slower you can get.) It might be that risk aversion is a good thing!
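A tiny worked example of that asymmetry, with made-up numbers: compare two median programmers against a pair where one is twice as fast and the other twice as slow. The pair is symmetric as ratios, but not in hours spent.

```python
from statistics import mean

# Hypothetical task times relative to the median programmer (median = 1.0).
median_pair = [1.0, 1.0]
# One programmer twice as fast, one twice as slow.
extreme_pair = [0.5, 2.0]

print(mean(median_pair))   # 1.0
print(mean(extreme_pair))  # 1.25
```

The slow programmer costs a full extra unit of time, while the fast one saves only half a unit, so the extreme pair is slower on average.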

There was a study I heard of second-hand (I wish I had a citation — anybody know?) that found that startups with a lot of women (I’m remembering 40%) had much MUCH higher survival rates than ones with lower proportions of women. This makes perfect sense to me; a risk-averse population would rein in the potentially destructive tendencies of a risk-seeking population.

Thus I think it does make sense to provide academic training in how to use tools. This should perhaps be coupled with some propaganda about how it is important to set aside some time in the future to get comfortable with tools. (Perhaps it should be presented as risky to not spend time tinkering with tools!)

UPDATE: There’s an interesting (though all-too-brief!) article that mentions differences in the biochemical responses to risk that men and women produce. It says that men produce adrenaline, which is fun. Women produce acetylcholine, which the article says pretty much makes them want to vomit. That could certainly change one’s reaction to risk.

Permalink

05.04.09

Posted in programmer productivity, Technology trends at 4:22 pm by ducky

Update: it turns out that lots of people have done exactly what I asked for: see Instruction-level Tracing: Framework & Applications and the OCaml debugger. Cooool! (Thanks DanE!)

In my user studies, programmers used the debugger far less than I had expected. Part of that could perhaps be due to poor training in how to use a debugger — it is rare to get good training in how to use a debugger.

However, I think the answer is simpler than that: it is just plain boring and tedious to use a debugger. One guy did solve a thorny problem by stepping through the debugger, but he had to press “step over” or “step into” ninety times.

And when you are stepping, you must pay attention. You can’t let your mind wander, or you will miss the event you are watching for. I can’t be the only person who has done step, step, step, step, step, step, step, boom, “oh crap, where was I in the previous step?”

Omniscient debuggers are one way to make it less tedious. Run the code until it goes boom, then back up. Unfortunately, omniscient debuggers capture so much information that it becomes technically difficult to store/manage it all.

I suggest a compromise: store the last N contexts — enough to examine the state of variables back N levels, and to replay if desired.

I can imagine two different ways of doing this. In the first, the user still has to press step step step; the debugger saves only the state changes between the lines that the user lands on. In other words, if you step over the foo() method, the debugger only notes any state differences between entering and exiting the foo() method, not any state that is local to foo(). If the user steps into foo(), then it logs state changes inside foo().

In the other method, the user executes the program, and the debugger logs ALL the state changes (including in foo(), including calls to HashTable.add(), etc.). This is probably easier on the user, but probably slower to execute and requires more storage.
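Here is a rough Python sketch of the first flavour, using the standard `sys.settrace` hook and a bounded `collections.deque`. It is a simplification under stated assumptions: it snapshots the whole set of local variables at each line instead of logging only the differences, it copies them shallowly, and it treats every call as "step over". The names `run_with_history` and `buggy` are hypothetical.

```python
import sys
from collections import deque

def run_with_history(func, n, *args):
    """Run func(*args), keeping only the last n (line number, locals) snapshots."""
    history = deque(maxlen=n)    # old snapshots fall off the front automatically
    target = func.__code__

    def tracer(frame, event, arg):
        if frame.f_code is not target:
            return None          # "step over": do not trace inside callees
        if event == "line":
            # Taken just before the line runs, so it shows the state going in.
            history.append((frame.f_lineno, dict(frame.f_locals)))
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    error = None
    try:
        func(*args)
    except Exception as exc:     # the "boom"
        error = exc
    finally:
        sys.settrace(previous)
    return error, list(history)

def buggy(values):
    total = 0
    for v in values:
        total += 10 // v         # blows up when v == 0
    return total

error, history = run_with_history(buggy, 3, [5, 2, 0, 1])
print(type(error).__name__)
for lineno, local_vars in history:
    print(lineno, local_vars)
```

After the boom, `history` holds just the last three contexts, so you can look back a few steps without having stored the whole run.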

You could also do something where you checkpoint the state every M steps. Thus, if you get to the boom-spot and want to know where variable someVariable was set, but it didn’t change in the past N steps, you can

- look at all your old checkpoints

- see which two checkpoints someVariable changed between

- rewind to the earlier of the two checkpoints

- set a watchpoint on someVariable

- run until the watchpoint.
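The "which two checkpoints did it change between" step could look something like this sketch. The checkpoint format (a step number plus a dictionary of variable values) and the function name are assumptions for illustration; a real debugger would store far more.

```python
def last_change_between(checkpoints, name):
    """checkpoints: list of (step, state dict), oldest first.

    Return the (earlier_step, later_step) pair that brackets the most
    recent change to `name`, or None if it never changed.
    """
    pairs = list(zip(checkpoints, checkpoints[1:]))
    for (step_a, state_a), (step_b, state_b) in reversed(pairs):
        if state_a.get(name) != state_b.get(name):
            return step_a, step_b
    return None

# Hypothetical checkpoints taken every M = 100 steps.
checkpoints = [
    (0,   {"someVariable": None}),
    (100, {"someVariable": 3}),
    (200, {"someVariable": 42}),
    (300, {"someVariable": 42}),
]
print(last_change_between(checkpoints, "someVariable"))  # (100, 200)
```

You would then rewind to step 100, set the watchpoint, and run forward.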

Permalink

11.03.08

Posted in programmer productivity at 10:17 am by ducky

Lutz Prechelt wrote a technical report way back in 1999 that did a more rigorous, mathematical analysis of the variance in the time it takes programmers to complete one task. He finds that the distribution is wickedly skewed, with most of the mass bunched on the left and a long tail to the right, and the difference between the top and kinda-normal programmers is about a factor of 2. It’s nice to find supporting evidence for what I’d reported earlier. Here’s the money graph:

Time to complete a task probability distribution

This graph shows the probability density — which is very much like a histogram — of the time it took people to do programming-related tasks. As this combines data from many many studies, he normalized all the studies so that the mean time-to-complete was 1.
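As a sketch of what that normalization means (the numbers below are invented, not Prechelt's data): divide each study's times by that study's mean, then pool the results.

```python
from statistics import mean, median

def normalize(times):
    """Scale one study's completion times so that their mean is 1."""
    m = mean(times)
    return [t / m for t in times]

# Hypothetical completion times in minutes from two unrelated studies.
study_a = [20, 25, 30, 45, 80]
study_b = [3, 4, 4, 5, 14]

pooled = normalize(study_a) + normalize(study_b)
print(round(mean(pooled), 6))   # 1.0
print(median(pooled) < 1)       # True
```

With a long slow tail, the median lands below the mean: most people finish faster than "average".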

Prechelt notes that the Sackman paper (which is the origin of the 28:1 figure that many people like to quote) has a number of issues. Mostly Prechelt covered Dickey’s objections, but he also notes that when the Sackman study was done in 1967, computer science education was much less homogeneous than it is now, so you might expect a much wider variation anyway.

Evidence against my theses

As long as I’m mentioning something that came up that supports my stance, I should mention two things that counter arguments that I have made.

1. When I’m talking to people, I frequently say that it’s far more important to not hire the loser than to hire the star. My husband points out that the tasks that user studies have their users do are all easy, and that some tasks are so difficult that only the star programmers can do them. This is true, and a valid point. Maybe you need one rock star on your team.

2. I corresponded briefly with my old buddy Ed Burns, the author of Secrets of the Rock Star Programmers. He is of the opinion that the rock star programmers are about 10x more productive than “normal” programmers. Interestingly, he thinks the difference is mastery of tools. I think this is good news for would-be programming stars: tools mastery is a thoroughly learnable skill.

Permalink

10.20.08

Posted in Canadian life, Eclipse, Hacking, programmer productivity at 3:28 pm by ducky

I am looking for a job. If you know anybody in the Vancouver area who is looking for a really good hire, point them at this blog posting or send them to my resume.

Ideally, I’d like an intermediate (possibly junior, let’s talk) Java software development position, preferably where I could become an expert in Java-based web applications. (Java because I think it has the best long-term prospects. My second choice would be a Ruby position, as it looks like Ruby has long-term staying power as well.) I believe that I would advance quickly, as I have very broad experience with many languages and environments.

Development is only partially about coding, and I am very, very strong in the secondary skills. While studying programmer productivity for my MSCS, I uncovered a few unknown or poorly known, broadly applicable, teachable skills that will improve programmer productivity. Hire me, and not only will you get a good coder, I will make the coders around me better.

I am particularly good at seeing how to solve users’ problems, sometimes problems that they themselves do not see. I am good at interaction design, at seeing the possibilities in a new technology, and at seeing how to combine the two. I have won a few awards for web sites I developed.

I also have really good communication skills. I’ve written books, I blog, and I’ve written a number of articles. I even have significant on-camera television experience.

If you want a really low-stress way of checking me out, invite me to give a talk on programmer productivity. (I like giving the talk, and am happy to give it to basically any group of developers, basically any time, anywhere in the BC Lower Mainland. Outside of the Lower Mainland, you have to pay me for the travel time.)

Do you know of any developer opportunities that I should check out? Let me know at ducky at webfoot dot com.

Permalink

09.10.08

Posted in Eclipse, Hacking, programmer productivity at 6:41 pm by ducky

In the observations of professional programmers that I did for my thesis, I frequently saw them get sidetracked because they didn’t have good information about whether the code they were looking at was executed as part of the feature they were exploring. I theorized that being able to look at the differences in code coverage between two runs — one where they exercised the feature and one where they didn’t — would be useful. Being able to see which methods executed in the first but not the second would hopefully help developers focus in quickly on the methods that were important to their task.

In my last month at UBC, I whipped up a prototype, an Eclipse plug-in called Tripoli, and ran a quickie user study. The differential code coverage information really does make a big difference. You can read a detailed account in UBC Technical Report TR-2008-14, but basically graduate students using Tripoli were frequently more successful than professional programmers who didn’t use Tripoli.

As an example, in the Size task, the subject needed to find where a pane was resized. That was hard. There is no menu item or key stroke invocation for developers to use as a starting point. They also didn’t know what the pane was called: was it a canvas, a pane, a panel, a frame, a drawing, or a view? Between pilot subjects and actual subjects, at least eleven people tried and failed to find a method that was always called when a pane was resized and never called when it wasn’t. I even spent a few hours trying to figure out where the pane was resized and couldn’t.

As soon as I got Tripoli working, I tried the Size task, and in my first diff, I saw ten methods, including one named endResizingFrame(). And yes, it turned out to be the right spot. I realized that most of the methods came from not hovering over the corner of the frame such that the cursor changed into the grab handle, and reran. This time I got exactly one method: endResizingFrame(). Wow. The graduate students in my second study were also all able to find endResizingFrame() by using Tripoli.
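At its core, the diff is just a set difference between the methods seen in two runs. The sketch below shows the idea only, not how Tripoli is implemented; apart from endResizingFrame(), the method names are made up.

```python
def differential_coverage(with_feature, without_feature):
    """Methods executed in the feature run but not in the baseline run."""
    return set(with_feature) - set(without_feature)

# Run 1: hover over the corner of the frame AND resize it.
resize_run = {"paint", "mouseMoved", "setCursor", "endResizingFrame"}
# Run 2: hover over the corner (so the cursor changes) but do not resize.
hover_only = {"paint", "mouseMoved", "setCursor"}

print(differential_coverage(resize_run, hover_only))  # {'endResizingFrame'}
```

The closer the second run is to the first, the fewer methods survive the subtraction, which is why hovering without resizing made such a good baseline.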

Tripoli does take some getting used to. Even as the author, it took me a while before I consistently remembered right away that I could use Tripoli on my problems. I’ve also clearly gotten better over time at figuring out what to do in my second run to ensure that the “diff” has very few methods in it.

If you want to try Tripoli out, I’ve posted it online. Just remember it’s a prototype.

Permalink

07.29.08

Posted in Hacking, programmer productivity, Technology trends, Uncategorized at 11:01 am by ducky

There’s a cool paper on a tool to do semi-automatic debugging: Triage: diagnosing production run failures at the user’s site. While Triage was designed to diagnose bugs at a customer site (where the software developers don’t have access to either the configuration or the data), I think a similar tool would be very valuable even for debugging in-house.

They use a number of different techniques to debug C++ code.

- Checkpoint the code at a number of steps.

- Attempt to reproduce the bug. This tells whether it is deterministic or not.

- Analyze the memory by walking the heap and stack to find possible corruptions.

- Roll back to previous checkpoints and rerun, looking for buffer overflows, dangling pointers, double frees, data races, semantic bugs, etc.

- Fuzz the inputs: intentionally vary the inputs, thread scheduling, memory layouts, signal delivery, and even control flows and memory states to narrow the conditions that trigger the failure for easy reproduction.

- Compare the code paths from failing replays and non-failing replays to determine what code was involved in that failure.

- Generate a report. This gives information on the failure and a suggestion of which lines to look at to fix it.
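The path-comparison step can be approximated with plain set arithmetic. To be clear, this is my own simplification and not Triage's actual algorithm: it flags the lines that ran in every failing replay and in no passing replay. The line numbers are invented, and the function needs at least one failing replay.

```python
def suspicious_lines(failing_runs, passing_runs):
    """Lines executed in every failing replay and in no passing replay."""
    in_all_failing = set.intersection(*map(set, failing_runs))
    in_any_passing = set().union(*map(set, passing_runs))
    return in_all_failing - in_any_passing

# Hypothetical sets of executed line numbers, one set per replay.
failing = [{10, 11, 12, 40, 41}, {10, 11, 40, 41, 50}]
passing = [{10, 11, 12, 50}, {10, 11, 13}]

print(sorted(suspicious_lines(failing, passing)))  # [40, 41]
```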

They did a user study and found that programmers took 45% less time to debug when they used Triage than when they didn’t for “real” bugs, and 18% for “toy” bugs. (“…although Triage still helped, the effect was not as large since the toy bugs are very simple and straightforward to diagnose even without Triage.”)

It looks like the subjects were given the Triage bug reports before they started work, so the time that it takes to run Triage wasn’t factored into the time it took. The time it took Triage to run was significant (up to 64 min for one of the bugs), but presumably the Triage run would be done in the background. I could set up Triage to run while I went to lunch, for example.

This looks cool.

Permalink

07.24.08

Posted in programmer productivity at 5:45 pm by ducky

I have finished my MS thesis, Path Exploration during Code Navigation!

Research summary

Here is a summary of what I learned during my two years of research; the thesis covers most but not all of the following:

I started out at UBC asking what good programmers do that bad programmers don’t. That raises the question, what is “good”? Good clearly has a time-to-complete component, but also a quality component. I looked around and couldn’t find a worthwhile quality measure, alas, so settled for looking for speed measures. I found some, talked about them on my blog, and summarized them in the first part of my VanDev talk. The big take-away for programmers is “Don’t get stuck!” (Note: this part is not written up in my thesis.)

Don’t get stuck!

How do you not get stuck? The literature seemed to imply that less-effective problem solvers in a domain (not just CS) tend to stick to one hypothesis for way too long, only abandoning it when they hit a dead end. The literature sure seemed to say that a shallower search over more paths (hypotheses) was better than searching one path more deeply. In CS lingo, a more breadth-first search (BFS) apparently is more effective than a more depth-first search (DFS).
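For anyone who doesn't have BFS and DFS at their fingertips, here is a small sketch of the two visiting orders on a made-up tree of hypotheses. The node names are hypothetical; the only difference between the two strategies is which end of the frontier you take from next.

```python
from collections import deque

# Hypothetical exploration tree: each hypothesis leads to follow-up places to look.
tree = {
    "bug": ["H1", "H2", "H3"],
    "H1": ["H1a", "H1b"],
    "H2": ["H2a"],
    "H3": [],
    "H1a": [], "H1b": [], "H2a": [],
}

def explore(root, breadth_first):
    """Return the order in which nodes are visited."""
    frontier, order = deque([root]), []
    while frontier:
        # BFS takes the oldest pending node; DFS takes the newest.
        node = frontier.popleft() if breadth_first else frontier.pop()
        order.append(node)
        children = tree[node]
        frontier.extend(children if breadth_first else reversed(children))
    return order

print(explore("bug", breadth_first=True))
# ['bug', 'H1', 'H2', 'H3', 'H1a', 'H1b', 'H2a']
print(explore("bug", breadth_first=False))
# ['bug', 'H1', 'H1a', 'H1b', 'H2', 'H2a', 'H3']
```

The depth-first order does not even look at H2 or H3 until everything under H1 is exhausted, which is the "stick to one hypothesis for way too long" pattern.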

Confirmation bias

This is consistent with a large body of research in Psychology about confirmation bias. If you have one hypothesis in your head, you will tend to over-believe evidence that agrees with that hypothesis and under-believe evidence that does not agree with that hypothesis. (For example, if you believed that Saddam Hussein had weapons of mass destruction and was trying to get more, you’d tend to believe reports that Hussein was trying to buy yellowcake from Niger and discount reports by UN weapons inspectors that he did not have WMDs.) There was a really neat paper Dual Space Search During Scientific Reasoning which found that giving people a few minutes dedicated to coming up with as many hypotheses as they could meant that they solved a specific problem much, much faster than a control group that started problem-solving immediately.

If you think of exploration paths as hypotheses of the form, “If I explore down this path, I will find what I’m looking for”, then this says that you would want to keep a few exploration paths in play at a time. You wouldn’t want to try to explore them all simultaneously, but you’d want them in the back of your mind to keep you from the trap of confirmation bias.

Tab support for Breadth-first-search

I noticed that Firefox tabs were much better at helping me keep track of different exploration paths than Eclipse tabs were. Firefox lets me open a bunch of search results in new tabs (putting those “hypotheses” in the back of my mind) and then, once I’ve opened all the search results I’m interested in, explore each in turn. Eclipse opens every file in a new tab, which doesn’t help you keep track of exploration paths. (Imagine if Firefox opened every Web page in a new tab. That would suck.)

User study

Armed with papers that suggest that breadth-first-search (BFS) was better than depth-first-search (DFS), I made a modified version of Eclipse, called Weta (for WEblike TAbbing), so that it had Firefox-style tabbing, then ran a user study. Because I specifically wanted to see if a more BFS-ish approach would help, I set up the study like this:

- The subjects did two programming tasks with stock Eclipse.

- I told them about the research that said that BFS was better.

- I showed them how to do a more BFS-ish navigation with Weta.

- The subjects did two tasks with Weta.

Most of the subjects pretty much loved the idea of Weta, but none of them ever used Weta to keep track of multiple exploration paths, different branches in the exploration tree. They used Weta, but they used it to mark places on the main trunk for them to come back to later. They’d open the declaration of an element in a new tab, immediately switch to the new tab, and continue down that path.

Why didn’t they use BFS?

Why didn’t they use BFS in the way that I had trained them? Several possibilities:

- Time? Maybe they didn’t have enough time to get used to using Weta. After using Eclipse for years, maybe two twenty-minute tasks just didn’t give them enough time to adjust to a new way of doing things.

- Complexity? Maybe the cognitive load of navigating code is so high that the cost of switching paths is high enough that switching frequently isn’t worth it. Maybe Web navigation is easy enough that the switching cost is low enough that BFS is worth it.

- In twenty lines of a Web page, you probably will only have two or three links to other Web pages, but in twenty lines of Java source you will probably have twenty or thirty Java elements that all have relationships to other Java elements.

- Web pages only have one kind of link; Java elements have multiple kinds of relationships to each other (calls, is called by, inherits, and implements).

- Code is harder to read than Web pages (assuming that you read the language the page is in). You don’t have to worry about conditionals and exceptions in Web pages.

- Confirmation bias, not BFSness? Maybe what is really important is that people not have confirmation bias, not that they use a particular strategy. Maybe just writing down three ideas for what is the root of the problem would be simpler and would require less effort.

- Bookmarks? Maybe developers wanted/needed bookmarks even more than they wanted BFS tools. In the Web navigation literature, the code navigation literature, and casual conversations with my friends and colleagues, I kept hearing that the list of bookmarks gets so long that it becomes unwieldy.

- (Mylyn is an Eclipse plug-in that is all about hiding information that you don’t need, so I asked the Mylyn team to hide all the bookmarks except those that were set during the currently-active Mylyn Task. They did, and they actually finished before my user study. Unfortunately, I didn’t realize the possible importance of bookmarks on my study, so didn’t bring it up. That feature of Mylyn was new enough that even the four regular Mylyn users in my study didn’t know about it.)

It would be interesting to see what people did if they had Weta for a longer term, and also to see what they do with bookmarks if given the Mylyn bookmark enhancement.

Other factors affecting getting stuck

While it wasn’t what the study was designed for, I noticed several things that multiple subjects had trouble with. One type of difficulty was Eclipse-specific, and one was not.

- Search. The Java Search dialog and the Find dialog both tripped people up, especially the Java Search dialog. I put in an enhancement request for a better UI for the Java Search dialog that has been assigned (i.e., the Eclipse team will probably do it). I also put in a request for a better Find dialog, but they indicated that they won’t fix it. 🙁

- Lack of runtime information. Static tracing frequently took the users to different places than the program actually went. I give a handful of examples in the writeup, but here’s one:

- In one of the tasks, users needed to do something with code that involved a GUI element that had the text “Active View Size” in it. They all did a search for “Active View Size” and went to a method in the class DrawApplication. They then did static tracing inside DrawApplication, following relationships from Java elements to other elements. It pretty much kept them inside the class DrawApplication. However, DrawApplication was a superclass of a superclass of the class that was actually run when reproducing the bug! Three of the seven subjects never noticed that.
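A toy illustration of how static tracing and runtime behaviour can part ways. The class names other than DrawApplication, the method name, and the assumption that the launched class overrides the method are all mine, made up to show the mechanism (and it's in Python rather than Java for brevity).

```python
class DrawApplication:
    def set_view_size(self):
        # Where a text search for the GUI label would land you.
        return "DrawApplication.set_view_size"

class MiddleApplication(DrawApplication):      # hypothetical intermediate class
    pass

class RunningApplication(MiddleApplication):   # hypothetical class actually launched
    def set_view_size(self):
        # What really executes when reproducing the bug.
        return "RunningApplication.set_view_size"

app = RunningApplication()
print(app.set_view_size())   # RunningApplication.set_view_size
```

Reading only DrawApplication, you would never see the code that actually ran.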

I think the discrepancy between runtime behaviour and static tracing is important. Subjects spent a lot of time trying to figure out what was executed, or went on wild goose chases because they got the wrong idea about how the code executed.

Suggestions

In addition to the little suggestions I had about the Java Search Dialog and the Find Dialog, I suggest that the IDE be enhanced to give developers visual information about what code is involved in the reproduction of a bug. I’ve talked about this wonder tool before, but I think I can be more concise here:

- Let the developer set markers, similar to breakpoints, that bracket the part of a run where things go wrong. Set a “start logging” breakpoint before things get messed up (or at the start of the code by default); set a “stop logging” breakpoint after things are known to be messed up.

- Colour the source code background based on whether the messed-up code was fully, partially, or not executed. (EclEmma already does this, but does it for the entire run, not for selected sections of the program execution.)

- Use Mylyn to (optionally) hide the Java elements (classes, methods, interfaces) that were not executed at all during the messed-up section of code. Now instead of having to search through maybe three million lines of code to understand the bug, they’d only have to search through maybe three hundred lines.
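The colouring and hiding steps boil down to something like the sketch below, assuming the IDE already knows which lines were hit between the “start logging” and “stop logging” markers. The method names, line numbers, and the `classify` function are hypothetical.

```python
def classify(method_lines, executed_lines):
    """Label each method 'full', 'partial', or 'none' from the lines hit in the window."""
    labels = {}
    for method, lines in method_lines.items():
        hit = lines & executed_lines
        if not hit:
            labels[method] = "none"
        elif hit == lines:
            labels[method] = "full"
        else:
            labels[method] = "partial"
    return labels

# Hypothetical methods mapped to their source line numbers.
method_lines = {
    "openFile": {10, 11, 12},
    "resizePane": {20, 21, 22, 23},
    "printPage": {30, 31},
}
executed_in_window = {10, 11, 12, 20, 21}   # lines hit between "start" and "stop"

labels = classify(method_lines, executed_in_window)
visible = [m for m, label in labels.items() if label != "none"]
print(labels)
print(visible)   # ['openFile', 'resizePane']
```

The labels would drive the background colours, and anything labelled "none" is what Mylyn would hide.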

If you want more details, please see the full thesis, comment here, or shoot me an email message (ducky at webfoot dot com).

Permalink
