11.30.08

Programming persistence

Warning: this is a long and geeky post.

From time to time in the past few years, I have mentioned that I was a little puzzled as to why more people didn’t render tiles on-the-fly for Google Maps, as I do in my U.S. Census Bureau/Google Maps mashup.

I have reappraised my attitude. I have been redoing my mapping framework to make it easier to use. I have reminded myself of all the hurdles I had to overcome, and discovered a number of annoying new ones.

First pass

I originally wrote my mapping framework in an extreme hurry. It was a term project, and a month before the end of the term, I realized that it would be good for personal reasons to hand it in a week early. The code functioned well enough to get me an A+, but I cut a huge number of corners.

Language/libraries/database choice

It was very important to minimize risk, so I wrote the framework in C++. I would have liked to use a scripting language, but I knew that I would need to use a graphics library and a library to interpret shapefiles. The only ones I found that looked reasonable were C-based libraries (Frank Warmerdam’s Shapelib library and Thomas Boutell’s gd library). I knew it was possible to call C libraries from a scripting language using a tool called SWIG, but I hadn’t ever used SWIG and had heard that it was touchy. Doing it in C++ was guaranteed to be painful, but I knew what the limits of that pain were. I didn’t know what the limits of pain of using SWIG would be.

Projection

I also had problems figuring out how to convert from latitude/longitude to pixel coordinates in the Google tile space. At the time (December 2005), I had a hard time simply finding out what the mathematics of the Mercator transformation were. (It is easier to find Mercator projection information now.) I was able to figure out something that worked most of the time, but if you zoomed out past a certain level, there would be a consistent error in the y-coordinates. The more you zoomed out, the bigger the error. I’m pretty sure it’s some sort of rounding error. I looked at it several times, trying to figure out where I could possibly have a roundoff error, but never did figure it out. I just restricted how far people could zoom out. (It also took a very long time to render tiles if you were way zoomed out, so it seemed reasonable to restrict it.)

Polygon intersection

I remember that I spent quite a lot of time on my polygon intersection code. I believe that I looked around the Web and didn’t find any helpful code, so I developed it from scratch on little sleep. (Remember, I was doing this in a frantic, frantic hurry.) I ended up with eight comparisons that needed to be done for each polygon in the database for every tile. More on this later.

Rendering bug

The version I handed in had a bug where horizontal lines would show up at the bottom of tiles frequently, as you can see in the bottom left tile:

I assumed that the bug was my fault, as gd is a very mature and well-used graphics library. My old office partner Carlos Pero had used it way back in 1994 to develop Carlos’ Coloring Book, so it seemed far more likely that the problem was in my code than in gd.

After I handed in my project, I spent quite a lot of time going through my code trying to figure out where the problem was with no luck. Frustrated, I downloaded and built gd so that I could put breakpoints into the gd code. Much to my surprise, I discovered that the bug was in the gd library! I thus had to study and understand the gd code, fix it, report the bug (and patch), and of course blog about it so that other people wouldn’t have the same problem.

Pointing my code to the fixed gd

Then, in order to actually get the fix, I had to figure out how to statically link gd into my binaries. I like my ISP (Dreamhost) and wasn’t particularly interested in changing, but that meant I couldn’t use the system-installed gd libraries. Statically linking wasn’t a huge deal, but it took me at least several hours to figure out which flag to insert where in my makefile to get it to build statically. It was just one more thing.

Second pass

I have graduated, but haven’t found a job yet, so I decided to revamp my mapping framework. In addition to the aesthetic joy of making nice clean code:

- It would be an opportunity to learn and demonstrate competence in another technology.

- I had ideas for how I could improve the performance by pre-computing some things.

- With a more flexible framework, I would be able to do some cool new mashups that I figured would get me more exposure, and hence lead to some consulting jobs.

Language/libraries/database choice

Vancouver is a PHP town, so I thought I’d give PHP a shot. I expected that I might have to rewrite my code in C++ eventually, but that I could get the basics of my improved algorithms shaken out first. (I’m not done yet, but so far, I have been very very pleased with that strategy.)

I also decided to use MySQL. While the feeling in the GIS community is that Postgres’s GIS extensions (PostGIS) are better than the GIS extensions to MySQL, I can’t run Postgres on my ISP, and MySQL is used more than Postgres.

I had installed PHP4 and MySQL 4 on my home computer some time ago, when I was working on Mapeteria. However, I recently upgraded my home Ubuntu installation to Hardy Heron, and PHP4 was no longer supported. That meant I needed to install a variety of packages, and I went through a process of downloading, trying, discovering I was missing a package, downloading/installing, discovering I was missing a package, lather, rinse, repeat. I needed to install mysql-server-5.0, mysql-client-5.0, php5, php5-mcrypt, php5-cli, php5-gd, libgd2-xpm-dev, php5-mysql, and php5-curl. I also spent some time trying to figure out why php5 wouldn’t run scripts that were in my cgi-bin directory before discovering that with mod_php, it was supposed to run from everywhere but the cgi-bin directory.

Note that I could have done all my development on my ISP’s machines, but that seemed clunky. I knew I’d want to be able to develop offline at some point, so wanted to get it done sooner rather than later. It’s also a little faster to develop on my local system.

I did a little bit of looking around for a graphics library, but stopped when I found that PHP had hooks to the gd library. I knew that if gd had not yet incorporated my horizontal lines bug fix, then I might have to drop back to C++ in order to link in “my” gd, but I figured I could worry about that later.

Projection

I made a conscious decision to write my Mercator conversion code from scratch, without looking at my C++ code. I did this because I didn’t want to be influenced in a way that might lead me to get the same error at way-zoomed-out that I did before. I was able to find equations on the Wikipedia Mercator page for transforming Mercator coordinates to X-Y coordinates, but those equations didn’t give a scale for the X-Y coordinates! It took some trial and error to sort that out.
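The missing scale comes from how Google's tiles are laid out: at zoom level z the whole world is a square 256 × 2^z pixels on a side, and the Mercator y value runs from π at the top edge to −π at the bottom. Here is a minimal Python sketch of the conversion under those assumptions. It is not the PHP code described in this post, and the function name `latlng_to_pixel` is made up for illustration.

```python
import math

TILE_SIZE = 256  # assumed tile edge length in pixels


def latlng_to_pixel(lat_deg, lng_deg, zoom):
    """Return global (x, y) pixel coordinates, origin at the top-left of the world."""
    world = TILE_SIZE * (2 ** zoom)  # width and height of the whole map in pixels

    # Longitude maps linearly onto x.
    x = (lng_deg + 180.0) / 360.0 * world

    # Mercator y in radians, as in the usual textbook formula.
    lat = math.radians(lat_deg)
    merc_y = math.log(math.tan(math.pi / 4 + lat / 2))

    # Scale so that +pi is the top edge (y = 0) and -pi is the bottom edge.
    y = (1.0 - merc_y / math.pi) / 2.0 * world
    return x, y
```

Keeping the result as floating point until the last moment, and only then truncating to whole pixels, avoids piling up rounding at each step.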

Data

For the initial development, I decided to use country boundaries instead of census tract boundaries. The code wouldn’t care which data it was using, and it would be nice to have tiles that would render faster when way-zoomed-out. I whipped up a script to read a KML file with country boundaries (that I got from Valery Hronusov and used in my Mapeteria project) and load it into MySQL. Unfortunately, I had real problems with precision. I don’t remember whether it was PHP or MySQL, but I kept losing some precision in the latitude and longitude when I read and uploaded it. I eventually converted to uploading integers that were 1,000,000 times the latitude and longitude, and so had no rounding difficulties.
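The integer trick is simple enough to show in a few lines. This is a minimal Python sketch rather than the actual loading script, and the helper names are hypothetical. Since longitude never exceeds 180 degrees, the scaled values stay under 180,000,000 in magnitude and fit comfortably in a signed 32-bit integer column.

```python
SCALE = 1_000_000  # store millionths of a degree


def to_microdegrees(degrees):
    """Convert a latitude or longitude in degrees to a scaled integer."""
    return int(round(degrees * SCALE))


def from_microdegrees(micro):
    """Convert a scaled integer back to degrees."""
    return micro / SCALE
```

Rounding (rather than truncating) before the integer conversion matters, because a product such as 49.282729 × 1,000,000 can come out a hair below the whole number in floating point.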

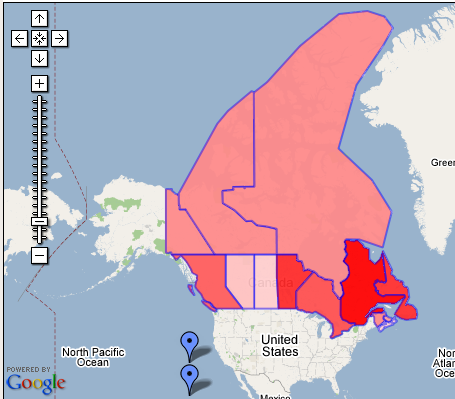

One thing that helped me enormously when working on the projection algorithm was to gather actual data via Google. I found a number of places on Google Maps where three territories (e.g. British Columbia, Alberta, and Montana) came together. I would determine the latitude/longitude of those points, then figure out what the tile coordinates, pixel X, and pixel Y of that point were for various zoom levels. That let me assemble high-quality test cases, which were absolutely essential in figuring out what the transformation algorithm should be, but it was very slow, boring, and tedious to collect that data.
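Each test case of this kind pairs a known point with the tile it falls in and the pixel position inside that tile. As a rough Python sketch, assuming 256-pixel tiles and a global pixel coordinate measured from the top-left corner of the world map (the function name is hypothetical), the split looks like this:

```python
TILE_SIZE = 256  # assumed tile edge length in pixels


def split_global_pixel(px, py):
    """Split global pixel coordinates into (tile_x, tile_y, x_in_tile, y_in_tile)."""
    # Truncate to whole pixels first, then divide by the tile size.
    tile_x, x_in_tile = divmod(int(px), TILE_SIZE)
    tile_y, y_in_tile = divmod(int(py), TILE_SIZE)
    return tile_x, tile_y, x_in_tile, y_in_tile
```

A hand-collected observation can then be compared directly against the four values this returns for the projected point.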

Polygon intersection

When it came time to implement my polygon bounding box intersection code again, I looked at my old polygon intersection code, saw that it took eight comparisons, and thought to myself, “That can’t be right!” Indeed, it took me very little time to come up with a version with only four comparisons (and I was now able to find sources on the Web that describe that algorithm).
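The widely described four-comparison test works by asking the opposite question: two axis-aligned boxes fail to overlap only if one lies entirely to the left of, right of, above, or below the other. A minimal Python sketch, with a hypothetical `Box` type and function name, and not necessarily the exact form used in this framework:

```python
from collections import namedtuple

# Axis-aligned bounding box; the field names are illustrative.
Box = namedtuple("Box", "min_x min_y max_x max_y")


def boxes_intersect(a, b):
    """Return True if the two boxes overlap or touch."""
    return (a.min_x <= b.max_x and  # a does not start to the right of b
            a.max_x >= b.min_x and  # a does not end to the left of b
            a.min_y <= b.max_y and  # a does not start above b
            a.max_y >= b.min_y)     # a does not end below b
```

This version counts boxes that merely share an edge as intersecting, which is the safer choice when deciding which polygons might need to be drawn on a tile.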

Stored procedures

One thing that I saw regularly in employment ads was a request for use of stored procedures, which became available with MySQL 5. It seemed reasonable that using a stored procedure to calculate the bounding box intersection would be even faster, so I ran some timing tests. In one, I used PHP to generate a complex WHERE clause string from eight values; in the other, I passed eight values to a stored procedure and used that in the WHERE clause. Much to my surprise, it took almost 20 times more time to use the stored procedure! I think I understand why, but it was interesting to discover that it was not always faster.

GIS extensions

My beloved husband had been harping on me to use the built-in GIS extensions. I had been ignoring him because a large part of the point of this exercise was to learn more about MySQL, including stored procedures, but now that I found that the stored procedure was slow, it was time to time the built-in bounding box intersection routine. If I stored the bounding box as a POLYGON type instead of as two coordinate pairs, then calculating the intersection took half the time. Woot!
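To store a bounding box as a POLYGON, the four corners have to be written out as a closed ring in well-known text (WKT), with the first corner repeated at the end. Here is a small Python sketch of building that string; the function name is hypothetical. The post does not say which MySQL functions were used, so the SQL in the comment is only one plausible shape, with an invented table `regions` and column `bbox`.

```python
def bbox_to_wkt(min_x, min_y, max_x, max_y):
    """Return the bounding box as a closed WKT POLYGON ring."""
    corners = [
        (min_x, min_y),
        (max_x, min_y),
        (max_x, max_y),
        (min_x, max_y),
        (min_x, min_y),  # repeat the first corner to close the ring
    ]
    ring = ", ".join(f"{x} {y}" for x, y in corners)
    return f"POLYGON(({ring}))"


# One possible query (table and column names are assumptions):
#   SELECT id FROM regions
#   WHERE MBRIntersects(bbox, GeomFromText('<wkt for the tile>'));
```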

Rendering

I discovered that despite my having reported the horizontal lines bug fifteen months ago, the gd team hasn’t done anything with it yet. Needless to say, this means that the version of libgd.a on Dreamhost has the bug in it. I thought about porting back to C++. I figured that porting back would probably take at minimum a week, and would raise the possibility of nasty pointer bugs, so it was worth spending a few days trying to get PHP to use my version of gd.

It is possible to compile your own version of PHP and use it, though it means using the CGI version of PHP instead of mod_php. I looked around for information on how to do that, and found a Dreamhost page on how to do so, but failed utterly when I followed the directions. I almost gave up at that point, but sent a detailed message to Dreamhost customer support explaining what I was trying to do, why, and what was blocking me. On US Thanksgiving Day, I got a very thoughtful response back from Robert at Dreamhost customer support which pointed me at a different how-to-compile-PHP-on-Dreamhost page that ultimately proved successful. (This is part of why I like Dreamhost and don’t really want to change ISPs.)

Compiling unfamiliar packages can be a real pain, and this was no different. The Dreamhost page (on their user-generated wiki) had a few scripts that would do the install/build for me, but they weren’t the whole story. Each of the scripts downloaded a number of projects (like openSSL, IMAP, CURL, etc) in compressed form, extracted the files, and built them. The scripts were somewhat fragile — they would just break if something didn’t work right. They were sometimes opaque — they didn’t always print an error message if something broke. If there was a problem, they started over from the beginning, removing everything that had been downloaded and extracted. Something small — like if the mirror site for mcrypt was so busy that the download timed out — would mean starting from scratch. (I ended up iteratively commenting out large swaths of the scripts so that I wouldn’t have to redo work.)

There was some problem with the IMAP build having to do with SSL. I finally changed one of the flags so that IMAP built without SSL — figuring that I was unlikely to be using this instance of PHP to do IMAP, let alone IMAP with SSL — but it took several false starts, each taking quite a long time to go through.

Finally, once I got it to build without my custom gd, I tried folding in my gd. I uploaded my gd/.libs directory, but that wasn’t enough — it wanted the gd.h file. I suppose I could have tried to figure out what it wanted, where it wanted it, but I figured it would be faster to just re-build gd on my Dreamhost account, then do a make install to some local directory. Uploading my source was fast and the build was slow but straightforward. However, I couldn’t figure out how to specify where the install should go. The makefiles were all autogenerated and very difficult to follow. I tried to figure out where in configure the install directory got set, but that too was hard to decipher. Finally, I just hand-edited the default installation directory. So there. That worked. PHP built!

Unfortunately, it wouldn’t run. It turned out that the installation script had a bug in it:

cp ${INSTALLDIR}/bin/php ${HOME}/${DOMAIN}/cgi-bin/php.cgi

instead of

cp ${INSTALLDIR}/bin/php.cgi ${HOME}/${DOMAIN}/cgi-bin/php.cgi

But finally, after all that, success!

Bottom line

So let me review what it took to get to tile rendering on the second pass:

- Choose a database and figure out how to extract data from it, requiring reading and learning.

- Find and load boundary information into the database, requiring trial and error.

- Choose a graphics library and figure out how to draw coloured polygons with it, requiring reading and learning.

- Gather test cases for converting from latitude/longitude into Google coordinate system, requiring patience. A lot of patience.

- Figure out how to translate from latitude/longitude pairs into the Google coordinate system, requiring algorithmic skills.

- Diagnose and fix a bug in a large-ish C graphics library, requiring skill debugging in C.

- Download and install PHP and MySQL, requiring system administration skills.

- Figure out how to build a custom PHP, requiring understanding of bash scripts and makefiles.

So now, I guess it isn’t that easy to generate tiles!

Note: there is an entirely different ecosystem for generating tiles, one that comes from the mainline GIS world, one that descends from the ESRI ecosystem. I expect that I could have used PostGIS and GeoTools with uDig. They look like fine tools, but they are complex tools with many, many features. Had I gone that route, I would have had to wade through a lot of documentation of features I didn’t care about. (I also would have had to figure out which ISP to move to in order to get Postgres.) I think that it would have taken me long enough to learn / install that ecosystem’s tools that it wouldn’t have been worth it for the relatively simple things that I needed to do. Your mileage may vary.